Probar

Probar (Spanish: "to test/prove") is a Rust-native testing framework for WASM games, providing a pure Rust alternative to Playwright/Puppeteer.

Installation

Probar is distributed as two crates:

| Crate | Purpose | Install |
|---|---|---|
| jugar-probar | Library for writing tests | cargo add jugar-probar --dev |
| probador | CLI tool for running tests | cargo install probador |

Library (jugar-probar)

Add to your Cargo.toml:

```toml
[dev-dependencies]
jugar-probar = "0.3"
```

```rust
use jugar_probar::prelude::*;
```

CLI (probador)

```bash
cargo install probador

# Validate a playbook state machine
probador playbook login.yaml --validate

# Run with mutation testing
probador playbook login.yaml --mutate

# Export state diagram
probador playbook login.yaml --export svg -o diagram.svg

# Start dev server for WASM
probador serve --port 8080
```

Features

- Browser Automation: Chrome DevTools Protocol (CDP) via chromiumoxide

- WASM Runtime Testing: Logic-only testing via wasmtime (no browser overhead)

- Visual Regression: Image comparison for UI stability

- Accessibility Auditing: WCAG compliance checking

- Deterministic Replay: Record and replay game sessions

- Monte Carlo Fuzzing: Random input generation with invariant checking

- Type-Safe Selectors: Compile-time checked entity/component queries

- GUI Coverage: Provable UI element and interaction coverage
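To make the Monte Carlo fuzzing bullet concrete, here is a self-contained sketch of the general technique, not Probar's API. Random inputs come from a fixed seed, a toy simulation is stepped frame by frame, and an invariant is checked after every frame. The `Paddle` model, the `XorShift64` generator and the `fuzz_paddle` helper are hypothetical names chosen for illustration.

```rust
/// Minimal xorshift64 generator so the sketch needs no external crates.
/// The state must be non-zero; a fixed seed makes every run reproducible.
struct XorShift64(u64);

impl XorShift64 {
    fn next_u64(&mut self) -> u64 {
        let mut x = self.0;
        x ^= x << 13;
        x ^= x >> 7;
        x ^= x << 17;
        self.0 = x;
        x
    }
}

/// Hypothetical game state: a paddle that must stay inside the playfield.
struct Paddle {
    y: f32,
}

impl Paddle {
    const MIN_Y: f32 = 0.0;
    const MAX_Y: f32 = 500.0; // 600 px field minus a 100 px paddle

    /// Apply one frame of input: -1 = up, 0 = idle, 1 = down.
    fn step(&mut self, input: i8, dt: f32) {
        let speed = 400.0; // pixels per second
        let next = self.y + f32::from(input) * speed * dt;
        self.y = next.clamp(Self::MIN_Y, Self::MAX_Y);
    }
}

/// Feed `frames` random inputs derived from `seed` and check the invariant
/// after every frame. Returns the first failing frame, or None.
fn fuzz_paddle(seed: u64, frames: u32) -> Option<u32> {
    let mut rng = XorShift64(seed.max(1)); // xorshift must not start at 0
    let mut paddle = Paddle { y: 250.0 };
    for frame in 0..frames {
        let input = (rng.next_u64() % 3) as i8 - 1; // -1, 0 or 1
        paddle.step(input, 1.0 / 60.0);
        // Invariant: the paddle never leaves the playfield.
        if !(Paddle::MIN_Y..=Paddle::MAX_Y).contains(&paddle.y) {
            return Some(frame);
        }
    }
    None
}
```

Because the inputs are a pure function of the seed, any seed that breaks the invariant doubles as a reproducible bug report.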

Feature Flags

| Feature | Description |
|---|---|
| browser | CDP browser automation (chromiumoxide, tokio) |
| runtime | WASM runtime testing (wasmtime) |
| derive | Type-safe derive macros (probar-derive) |

Why Probar?

| Aspect | Playwright | Probar |
|---|---|---|
| Language | TypeScript | Pure Rust |
| Browser | Required (Chromium) | Optional |
| Game State | Black box (DOM only) | Direct API access |
| CI Setup | Node.js + browser | Just cargo test |
| Zero JS | Violates constraint | Pure Rust |

Design Principles

Probar is built on pragmatic testing principles:

- Poka-Yoke (Mistake-Proofing): Type-safe selectors prevent runtime errors

- Muda (Waste Elimination): Zero-copy memory views for efficiency

- Jidoka (Autonomation): Fail-fast with configurable error handling

- Genchi Genbutsu (Go and See): Abstract drivers allow swapping implementations

- Heijunka (Level Loading): Superblock scheduling for consistent performance

Why Probar?

Probar was created as a complete replacement for Playwright in the Jugar ecosystem.

The Problem with Playwright

- JavaScript Dependency: Playwright requires Node.js and npm

- Browser Overhead: Must download and run Chromium

- Black Box Testing: Can only inspect DOM, not game state

- CI Complexity: Requires browser installation in CI

- Violates Zero-JS: Contradicts Jugar's core constraint

What Probar Solves

Zero JavaScript

Before (Playwright):

```text
├── package.json
├── node_modules/
├── tests/
│   └── pong.spec.ts   ← TypeScript!
└── playwright.config.ts
```

After (Probar):

```text
└── tests/
    └── probar_pong.rs   ← Pure Rust!
```

Direct State Access

Playwright treats the game as a black box:

```typescript
// Can only check DOM
await expect(page.locator('#score')).toHaveText('10');
```

Probar can inspect game state directly:

```rust
// Direct access to game internals
let score = platform.get_game_state().score;
assert_eq!(score, 10);

// Check entity positions
for entity in platform.query_entities::<Ball>() {
    assert!(entity.position.y < 600.0);
}
```

Deterministic Testing

Playwright: Non-deterministic due to browser timing

```typescript
// Flaky! Timing varies between runs
await page.waitForTimeout(100);
await expect(ball).toBeVisible();
```

Probar: Fully deterministic

```rust
// Exact frame control
for _ in 0..100 {
    platform.advance_frame(1.0 / 60.0);
}
let ball_pos = platform.get_ball_position();
assert_eq!(ball_pos, expected_pos); // Always passes
```
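The assertion above can use `assert_eq!` because nothing depends on wall-clock time. The same starting state and the same fixed `dt` produce the same sequence of floating-point operations. The sketch below shows that idea with a hypothetical `Ball` model and `simulate` function. It is not Probar's `WebPlatform`, and bit-identical float results should only be expected from the same binary on the same platform.

```rust
/// Hypothetical ball state used only for this illustration.
#[derive(Debug, Clone, Copy, PartialEq)]
struct Ball {
    x: f32,
    y: f32,
    vx: f32,
    vy: f32,
}

const WIDTH: f32 = 800.0;
const HEIGHT: f32 = 600.0;

/// Advance the ball by one fixed timestep, reflecting off the walls.
fn advance_frame(ball: &mut Ball, dt: f32) {
    ball.x += ball.vx * dt;
    ball.y += ball.vy * dt;
    if ball.x < 0.0 || ball.x > WIDTH {
        ball.vx = -ball.vx;
        ball.x = ball.x.clamp(0.0, WIDTH);
    }
    if ball.y < 0.0 || ball.y > HEIGHT {
        ball.vy = -ball.vy;
        ball.y = ball.y.clamp(0.0, HEIGHT);
    }
}

/// Run from a fixed initial state for `frames` frames at 60 Hz.
/// No clock is read, so the result depends only on `frames`.
fn simulate(frames: u32) -> Ball {
    let mut ball = Ball { x: 400.0, y: 300.0, vx: 250.0, vy: -180.0 };
    for _ in 0..frames {
        advance_frame(&mut ball, 1.0 / 60.0);
    }
    ball
}
```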

Simpler CI

Playwright CI:

```yaml
- name: Install Node.js
  uses: actions/setup-node@v3
- name: Install dependencies
  run: npm ci
- name: Install Playwright
  run: npx playwright install chromium
- name: Run tests
  run: npm test
```

Probar CI:

```yaml
- name: Run tests
  run: cargo test
```

Feature Comparison

| Feature | Playwright | Probar |
|---|---|---|
| Language | TypeScript | Pure Rust |
| Browser required | Yes | No |
| Game state access | DOM only | Direct |
| Deterministic | No | Yes |
| CI setup | Complex | Simple |
| Frame control | Approximate | Exact |
| Memory inspection | No | Yes |
| Replay support | No | Yes |
| Fuzzing | No | Yes |

Migration Example

Before (Playwright)

```typescript
import { test, expect } from '@playwright/test';

test('ball bounces off walls', async ({ page }) => {
  await page.goto('http://localhost:8080');

  // Wait for game to load
  await page.waitForSelector('#game-canvas');

  // Simulate gameplay
  await page.waitForTimeout(2000);

  // Check score changed (indirect verification)
  const score = await page.locator('#score').textContent();
  expect(parseInt(score)).toBeGreaterThan(0);
});
```

After (Probar)

```rust
#[test]
fn ball_bounces_off_walls() {
    let mut platform = WebPlatform::new_for_test(WebConfig::default());

    // Advance exactly 120 frames (2 seconds at 60fps)
    for _ in 0..120 {
        platform.advance_frame(1.0 / 60.0);
    }

    // Direct state verification
    let state = platform.get_game_state();
    assert!(state.ball_bounces > 0, "Ball should have bounced");
    assert!(state.score > 0, "Score should have increased");
}
```

Performance Comparison

| Metric | Playwright | Probar |
|---|---|---|
| Test startup | ~3s | ~0.1s |
| Per-test overhead | ~500ms | ~10ms |
| 39 tests total | ~45s | ~3s |
| CI setup time | ~2min | 0 |
| Memory usage | ~500MB | ~50MB |

When to Use Each

Use Probar for:

- Unit tests

- Integration tests

- Deterministic replay

- Fuzzing

- Performance benchmarks

- CI/CD pipelines

Use Browser Testing for:

- Visual regression (golden master)

- Cross-browser compatibility

- Real user interaction testing

- Production smoke tests

Probar Quick Start

Get started with Probar testing in 5 minutes.

Installation

Probar is distributed as two crates:

| Crate | Purpose | Install |
|---|---|---|
| jugar-probar | Library for writing tests | cargo add jugar-probar --dev |
| probador | CLI tool | cargo install probador |

Add the Library

```toml
[dev-dependencies]
jugar-probar = "0.3"
```

Install the CLI (Optional)

```bash
cargo install probador
```

Write Your First Test

```rust
use jugar_probar::prelude::*;

#[test]
fn test_game_initializes() {
    // Create test platform
    let config = WebConfig::new(800, 600);
    let mut platform = WebPlatform::new_for_test(config);

    // Run initial frame
    let output = platform.frame(0.0, "[]");

    // Verify game started
    assert!(output.contains("commands"));
}
```

Run Tests

```bash
# Run all tests
cargo test

# Show println! output instead of capturing it
cargo test -- --nocapture

# Using probador CLI
probador test
```

Test Structure

Basic Assertions

```rust
use jugar_probar::Assertion;

#[test]
fn test_assertions() {
    // Equality
    let eq = Assertion::equals(&42, &42);
    assert!(eq.passed);

    // Range
    let range = Assertion::in_range(50.0, 0.0, 100.0);
    assert!(range.passed);

    // Boolean
    let truthy = Assertion::is_true(true);
    assert!(truthy.passed);

    // Approximate equality (for floats)
    let approx = Assertion::approx_eq(3.14, 3.14159, 0.01);
    assert!(approx.passed);
}
```

GUI Coverage

```rust
use jugar_probar::gui_coverage;

#[test]
fn test_gui_coverage() {
    let mut gui = gui_coverage! {
        buttons: ["start", "pause", "quit"],
        screens: ["menu", "game", "game_over"]
    };

    // Record interactions
    gui.click("start");
    gui.visit("menu");
    gui.visit("game");

    // Check coverage
    println!("{}", gui.summary());
    assert!(gui.meets(50.0)); // At least 50% coverage
}
```
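One plausible way to compute the percentage behind `gui.meets(50.0)` is sketched below. It assumes coverage is simply covered elements divided by declared elements: three of the six declared above, so 50%. `GuiCoverage` and its methods are hypothetical and are not the type the `gui_coverage!` macro actually produces.

```rust
use std::collections::HashSet;

/// Hypothetical tracker: a fixed set of declared elements and the subset
/// that a test actually exercised.
struct GuiCoverage {
    known: HashSet<String>,
    covered: HashSet<String>,
}

impl GuiCoverage {
    fn new(buttons: &[&str], screens: &[&str]) -> Self {
        let known = buttons
            .iter()
            .map(|b| format!("button:{b}"))
            .chain(screens.iter().map(|s| format!("screen:{s}")))
            .collect();
        Self { known, covered: HashSet::new() }
    }

    fn click(&mut self, button: &str) {
        self.record(format!("button:{button}"));
    }

    fn visit(&mut self, screen: &str) {
        self.record(format!("screen:{screen}"));
    }

    /// Only declared elements count; unknown names are ignored.
    fn record(&mut self, key: String) {
        if self.known.contains(&key) {
            self.covered.insert(key);
        }
    }

    /// Covered / declared, as a percentage (an empty GUI counts as 100%).
    fn percent(&self) -> f64 {
        if self.known.is_empty() {
            return 100.0;
        }
        100.0 * self.covered.len() as f64 / self.known.len() as f64
    }

    fn meets(&self, threshold: f64) -> bool {
        self.percent() >= threshold
    }
}
```

Keys are prefixed with their kind so that a button and a screen with the same name are counted separately. Interactions with undeclared elements are ignored rather than inflating the score.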

Testing Game Logic

```rust
#[test]
fn test_ball_movement() {
    let mut platform = WebPlatform::new_for_test(WebConfig::default());

    // Get initial position
    let initial_pos = platform.get_ball_position();

    // Advance 60 frames (1 second)
    for _ in 0..60 {
        platform.advance_frame(1.0 / 60.0);
    }

    // Ball should have moved
    let new_pos = platform.get_ball_position();
    assert_ne!(initial_pos, new_pos);
}
```

Testing Input

```rust
#[test]
fn test_paddle_responds_to_input() {
    let mut platform = WebPlatform::new_for_test(WebConfig::default());
    let initial_y = platform.get_paddle_y(Player::Left);

    // Simulate pressing W key
    platform.key_down("KeyW");
    for _ in 0..30 {
        platform.advance_frame(1.0 / 60.0);
    }
    platform.key_up("KeyW");

    // Paddle should have moved up
    let new_y = platform.get_paddle_y(Player::Left);
    assert!(new_y < initial_y);
}
```

Using probador CLI

```bash
# Validate playbook state machines
probador playbook login.yaml --validate

# Export state diagram as SVG
probador playbook login.yaml --export svg -o diagram.svg

# Run mutation testing
probador playbook login.yaml --mutate

# Generate coverage reports
probador coverage --html

# Watch mode with hot reload
probador watch tests/

# Start dev server for WASM
probador serve --port 8080
```

Examples

Run the included examples:

```bash
# Deterministic simulation with replay
cargo run --example pong_simulation -p jugar-probar

# Locator API demo
cargo run --example locator_demo -p jugar-probar

# Accessibility checking
cargo run --example accessibility_demo -p jugar-probar

# GUI coverage demo
cargo run --example gui_coverage -p jugar-probar
```

Example Output

```text
=== Probar Pong Simulation Demo ===

--- Demo 1: Pong Simulation ---
Initial state:
Ball: (400.0, 300.0)
Paddles: P1=300.0, P2=300.0
Score: 0 - 0

Simulating 300 frames...

Final state after 300 frames:
Ball: (234.5, 412.3)
Paddles: P1=180.0, P2=398.2
Score: 2 - 1
State valid: true

--- Demo 2: Deterministic Replay ---
Recording simulation (seed=42, frames=500)...
Completed: true
Final hash: 6233835744931225727

Replaying simulation...
Determinism verified: true
Hashes match: true
```
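The "Final hash" and "Hashes match" lines reflect a record/replay check. The simulation runs once from a seed while logging inputs, then only the log is replayed, and a hash of the final state is compared between the two runs. A minimal sketch of that pattern follows. The `State` struct, the LCG input source and the `record`/`replay` functions are hypothetical, and the hash values will not match the demo output above.

```rust
use std::collections::hash_map::DefaultHasher;
use std::hash::{Hash, Hasher};

/// Hypothetical game state. Integer fields keep hashing exact.
#[derive(Debug, Default, Hash)]
struct State {
    frame: u32,
    paddle_y: i32,
}

/// Advance one frame with an input of -1, 0 or 1.
fn apply(state: &mut State, input: i8) {
    state.paddle_y = (state.paddle_y + i32::from(input) * 5).clamp(0, 500);
    state.frame += 1;
}

/// Hash the full state. DefaultHasher::new() uses fixed keys, so hashes are
/// comparable within one build (not guaranteed across Rust versions).
fn state_hash(state: &State) -> u64 {
    let mut hasher = DefaultHasher::new();
    state.hash(&mut hasher);
    hasher.finish()
}

/// Recording run: derive inputs from a seeded LCG, log them, hash the result.
fn record(seed: u64, frames: u32) -> (Vec<i8>, u64) {
    let mut rng = seed;
    let mut state = State::default();
    let mut log = Vec::with_capacity(frames as usize);
    for _ in 0..frames {
        // 64-bit LCG step (Knuth's MMIX constants).
        rng = rng
            .wrapping_mul(6_364_136_223_846_793_005)
            .wrapping_add(1_442_695_040_888_963_407);
        let input = ((rng >> 33) % 3) as i8 - 1;
        log.push(input);
        apply(&mut state, input);
    }
    (log, state_hash(&state))
}

/// Replay run: ignore the seed entirely and re-apply the logged inputs.
fn replay(log: &[i8]) -> u64 {
    let mut state = State::default();
    for &input in log {
        apply(&mut state, input);
    }
    state_hash(&state)
}
```

`DefaultHasher` output is not guaranteed to stay the same across Rust releases. A replay stored on disk should therefore probably use a fixed, documented hash algorithm.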

Next Steps

- Assertions - All assertion types

- Simulation - Deterministic simulation

- Fuzzing - Random testing

- Coverage Tooling - Code coverage

- CLI Reference - Full probador command reference

Probar: WASM-Native Game Testing

Probar (Spanish: "to test/prove") is a pure Rust testing framework for WASM games that provides full Playwright feature parity while adding WASM-native capabilities.

Installation

| Crate | Purpose | Install |
|---|---|---|
| jugar-probar | Library for writing tests | cargo add jugar-probar --dev |
| probador | CLI tool | cargo install probador |

Why Probar?

| Aspect | Playwright | Probar |
|---|---|---|
| Language | TypeScript | Pure Rust |
| Browser | Required (Chromium) | Not needed |
| Game State | Black box (DOM only) | Direct API access |
| CI Setup | Node.js + browser | Just cargo test |
| Zero JS | ❌ Violates constraint | ✅ Pure Rust |

Key Features

Playwright Parity

- CSS, text, testid, XPath, role-based locators

- All standard assertions (visibility, text, count)

- All actions (click, fill, type, hover, drag)

- Auto-waiting with configurable timeouts

- Network interception and mobile emulation

WASM-Native Extensions

- Zero-copy memory views - Direct WASM memory inspection

- Type-safe entity selectors - Compile-time verified game object access

- Deterministic replay - Record inputs with seed, replay identically

- Invariant fuzzing - Concolic testing for game invariants

- Frame-perfect timing - Fixed timestep control

- WCAG accessibility - Color contrast and photosensitivity checking

Quick Example

```rust
use jugar_probar::Assertion;
use jugar_web::{WebConfig, WebPlatform};

#[test]
fn test_game_starts() {
    let config = WebConfig::new(800, 600);
    let mut platform = WebPlatform::new_for_test(config);

    // Run a frame
    let output = platform.frame(0.0, "[]");

    // Verify output
    assert!(output.contains("commands"));

    // Use Probar assertions
    let assertion = Assertion::in_range(60.0, 0.0, 100.0);
    assert!(assertion.passed);
}
```

Running Tests

```bash
# All Probar E2E tests
cargo test -p jugar-web --test probar_pong

# Show println! output instead of capturing it
cargo test -p jugar-web --test probar_pong -- --nocapture

# Via Makefile
make test-e2e
make test-e2e-verbose
```

Test Suites

| Suite | Tests | Coverage |
|---|---|---|
| Pong WASM Game (Core) | 6 | WASM loading, rendering, input |
| Pong Demo Features | 22 | Game modes, HUD, AI widgets |
| Release Readiness | 11 | Stress tests, performance, edge cases |

Total: 39 tests

Architecture

Dual-Runtime Strategy

```text
┌─────────────────────────────────┐ ┌─────────────────────────────────┐
│ WasmRuntime (wasmtime) │ │ BrowserController (Chrome) │
│ ───────────────────────── │ │ ───────────────────────── │
│ Purpose: LOGIC-ONLY testing │ │ Purpose: GOLDEN MASTER │
│ │ │ │
│ ✓ Unit tests │ │ ✓ E2E tests │
│ ✓ Deterministic replay │ │ ✓ Visual regression │
│ ✓ Invariant fuzzing │ │ ✓ Browser compatibility │
│ ✓ Performance benchmarks │ │ ✓ Production parity │
│ │ │ │
│ ✗ NOT for rendering │ │ This is the SOURCE OF TRUTH │
│ ✗ NOT for browser APIs │ │ for "does it work?" │
└─────────────────────────────────┘ └─────────────────────────────────┘
```

Toyota Way Principles

| Principle | Application |
|---|---|
| Poka-Yoke | Type-safe selectors prevent typos at compile time |
| Muda | Zero-copy memory views eliminate serialization |
| Genchi Genbutsu | ProbarDriver abstraction for swappable backends |
| Andon Cord | Fail-fast mode stops on first critical failure |
| Jidoka | Quality built into the type system |

Next Steps

- Why Probar? - Detailed comparison with Playwright

- Quick Start - Get started testing

- Assertions - Available assertion types

- Coverage Tooling - Advanced coverage analysis

Locators

Probar provides Playwright-style locators for finding game elements with full Playwright parity.

Locator Strategy

```text
┌─────────────────────────────────────────────────────────────────┐
│ LOCATOR STRATEGIES │
├─────────────────────────────────────────────────────────────────┤
│ │
│ ┌─────────────┐ ┌─────────────┐ ┌─────────────┐ │
│ │ CSS │ │ TestID │ │ Text │ │
│ │ Selector │ │ Selector │ │ Selector │ │
│ │ "button.x" │ │ "submit-btn"│ │ "Click me" │ │
│ └──────┬──────┘ └──────┬──────┘ └──────┬──────┘ │
│ │ │ │ │
│ └────────────┬────┴────────────────┘ │
│ ▼ │
│ ┌──────────────┐ │
│ │ Locator │ │
│ │ Chain │ │
│ └──────┬───────┘ │
│ │ │
│ ┌──────────┼──────────┐ │
│ ▼ ▼ ▼ │
│ ┌────────┐ ┌────────┐ ┌────────┐ │
│ │ filter │ │ and │ │ or │ │
│ │ (opts) │ │ (loc) │ │ (loc) │ │
│ └────────┘ └────────┘ └────────┘ │
│ │
│ SEMANTIC: role, label, placeholder, alt_text │
│ SPATIAL: within_radius, in_bounds, nearest_to │
│ ECS: has_component, component_matches │
│ │
└─────────────────────────────────────────────────────────────────┘
```

Basic Locators

```rust
use probar::{Locator, Selector};

// CSS selector
let button = Locator::new("button.primary");

// Test ID selector (recommended for stability)
let submit = Locator::by_test_id("submit-button");

// Text content
let start = Locator::by_text("Start Game");

// Entity selector (WASM games)
let player = Locator::from_selector(Selector::entity("player"));
```

Semantic Locators (PMAT-001)

Probar supports Playwright's semantic locators for accessible testing:

```rust
use probar::{Locator, Selector};

// Role selector (ARIA roles)
let button = Locator::by_role("button");
let link = Locator::by_role("link");
let textbox = Locator::by_role("textbox");

// Role with name filter (like Playwright's { name: 'Submit' })
let submit = Locator::by_role_with_name("button", "Submit");

// Label selector (form elements by label text)
let username = Locator::by_label("Username");
let password = Locator::by_label("Password");

// Placeholder selector
let search = Locator::by_placeholder("Search...");
let email = Locator::by_placeholder("Enter email");

// Alt text selector (images)
let logo = Locator::by_alt_text("Company Logo");
let avatar = Locator::by_alt_text("Player Avatar");
```

Selector Variants

```rust
use probar::Selector;

// All selector types
let css = Selector::css("button.primary");
let xpath = Selector::XPath("//button[@id='submit']".into());
let text = Selector::text("Click me");
let test_id = Selector::test_id("submit-btn");
let entity = Selector::entity("hero");

// Semantic selectors
let role = Selector::role("button");
let role_named = Selector::role_with_name("button", "Submit");
let label = Selector::label("Username");
let placeholder = Selector::placeholder("Search");
let alt = Selector::alt_text("Logo");

// Combined with text filter
let css_text = Selector::CssWithText {
    css: "button".into(),
    text: "Submit".into(),
};
```

Entity Queries

```rust
let platform = WebPlatform::new_for_test(config);

// Find single entity
let player = platform.locate(Locator::id("player"))?;
let pos = platform.get_position(player);

// Find all matching
let coins: Vec<Entity> = platform.locate_all(Locator::tag("coin"));
assert_eq!(coins.len(), 5);

// First matching
let first_enemy = platform.locate_first(Locator::tag("enemy"));
```

Locator Operations (PMAT-002)

Probar supports Playwright's locator composition operations:

Filter

```rust
use probar::{Locator, FilterOptions};

// Filter with hasText
let active_buttons = Locator::new("button")
    .filter(FilterOptions::new().has_text("Active"));

// Filter with hasNotText
let enabled = Locator::new("button")
    .filter(FilterOptions::new().has_not_text("Disabled"));

// Filter with child locator
let with_icon = Locator::new("button")
    .filter(FilterOptions::new().has(Locator::new(".icon")));

// Combined filters
let opts = FilterOptions::new()
    .has_text("Submit")
    .has_not_text("Cancel");
```
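The filter semantics can be illustrated with a small in-memory model. It assumes `has_text` and `has_not_text` are substring checks combined with AND. `Element` and this `FilterOptions` are hypothetical stand-ins, and the match here is case-sensitive. Playwright's string `hasText` is case-insensitive, and this page does not say which rule Probar follows.

```rust
/// Hypothetical stand-in for a located element; only its text matters here.
#[derive(Debug, PartialEq)]
struct Element {
    text: &'static str,
}

/// Hypothetical filter: every option that is set must hold (logical AND).
#[derive(Default)]
struct FilterOptions {
    has_text: Option<&'static str>,
    has_not_text: Option<&'static str>,
}

impl FilterOptions {
    fn has_text(mut self, text: &'static str) -> Self {
        self.has_text = Some(text);
        self
    }

    fn has_not_text(mut self, text: &'static str) -> Self {
        self.has_not_text = Some(text);
        self
    }

    /// Case-sensitive substring checks; an unset option always passes.
    fn matches(&self, el: &Element) -> bool {
        let has_ok = self.has_text.map_or(true, |t| el.text.contains(t));
        let not_ok = self.has_not_text.map_or(true, |t| !el.text.contains(t));
        has_ok && not_ok
    }
}

/// Keep only the elements that satisfy every filter option.
fn filter<'a>(elements: &'a [Element], opts: &FilterOptions) -> Vec<&'a Element> {
    elements.iter().filter(|el| opts.matches(el)).collect()
}
```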

And/Or Composition

```rust
use probar::Locator;

// AND - both conditions must match (intersection)
let active_button = Locator::new("button")
    .and(Locator::new(".active"));
// Produces: "button.active"

// OR - either condition can match (union)
let clickable = Locator::new("button")
    .or(Locator::new("a.btn"));
// Produces: "button, a.btn"

// Chain multiple ORs
let any_interactive = Locator::new("button")
    .or(Locator::new("a"))
    .or(Locator::new("[role='button']"));
```
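The "Produces" comments suggest that CSS locators compose as strings: AND concatenates compound selectors and OR joins them with a comma. A minimal sketch of those two rules follows. It uses a hypothetical `CssLocator` type rather than Probar's `Locator`.

```rust
/// Hypothetical string-backed locator reproducing the composition rules
/// from the comments above.
#[derive(Debug, Clone, PartialEq)]
struct CssLocator(String);

impl CssLocator {
    fn new(selector: &str) -> Self {
        CssLocator(selector.to_string())
    }

    /// Intersection by concatenation: "button" AND ".active" -> "button.active".
    fn and(self, other: CssLocator) -> Self {
        CssLocator(format!("{}{}", self.0, other.0))
    }

    /// Union via a CSS selector list: "button" OR "a.btn" -> "button, a.btn".
    fn or(self, other: CssLocator) -> Self {
        CssLocator(format!("{}, {}", self.0, other.0))
    }
}
```

Plain concatenation only yields a valid intersection when the right-hand selector starts with a class, id, attribute or pseudo-class. It also mis-scopes a left side that already contains a comma (`a, b` AND `.x` gives `a, b.x`), so a fuller implementation would need to distribute AND over each alternative.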

Index Operations

```rust
use probar::Locator;

// Get first element
let first_item = Locator::new("li.menu-item").first();

// Get last element
let last_item = Locator::new("li.menu-item").last();

// Get nth element (0-indexed)
let third_item = Locator::new("li.menu-item").nth(2);

// Chained operations
let second_active = Locator::new("button")
    .and(Locator::new(".active"))
    .nth(1);
```

Compound Locators

```rust
// AND - must match all
let armed_enemy = Locator::new(".enemy")
    .and(Locator::new(".armed"));

// OR - match any
let interactable = Locator::new(".door")
    .or(Locator::new(".chest"));

// Combined with index
let first_enemy = Locator::new(".enemy").first();
```

Spatial Locators

```rust
// Within radius
let nearby = Locator::within_radius(player_pos, 100.0);

// In bounds
let visible = Locator::in_bounds(screen_bounds);

// Nearest to point
let closest_enemy = Locator::nearest_to(player_pos)
    .with_filter(Locator::tag("enemy"));
```
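Spatial locators reduce to distance tests over entity positions. The sketch below assumes a plain Euclidean metric and uses hypothetical `Entity` and `Vec2` types, not Probar's. Comparing squared distances avoids a square root per entity.

```rust
#[derive(Debug, Clone, Copy, PartialEq)]
struct Vec2 {
    x: f32,
    y: f32,
}

impl Vec2 {
    fn distance_sq(self, other: Vec2) -> f32 {
        let (dx, dy) = (self.x - other.x, self.y - other.y);
        dx * dx + dy * dy
    }
}

/// Hypothetical entity record: an id, a tag and a position.
struct Entity {
    id: u32,
    tag: &'static str,
    pos: Vec2,
}

/// Ids of entities whose distance to `center` is at most `radius`.
fn within_radius(entities: &[Entity], center: Vec2, radius: f32) -> Vec<u32> {
    entities
        .iter()
        .filter(|e| e.pos.distance_sq(center) <= radius * radius)
        .map(|e| e.id)
        .collect()
}

/// Id of the closest entity carrying `tag`, or None if no entity has it.
fn nearest_with_tag(entities: &[Entity], point: Vec2, tag: &str) -> Option<u32> {
    entities
        .iter()
        .filter(|e| e.tag == tag)
        .min_by(|a, b| a.pos.distance_sq(point).total_cmp(&b.pos.distance_sq(point)))
        .map(|e| e.id)
}
```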

Component-Based Locators

```rust
// Has specific component
let physics_entities = Locator::has_component::<RigidBody>();

// Component matches predicate
let low_health = Locator::component_matches::<Health>(|h| h.value < 20);

// Has all components
let complete_entities = Locator::has_all_components::<(
    Position,
    Velocity,
    Sprite,
)>();
```

Type-Safe Locators (with derive)

Using jugar-probar-derive for compile-time checked selectors:

```rust
use jugar_probar_derive::Entity;

#[derive(Entity)]
#[entity(id = "player")]
struct Player;

#[derive(Entity)]
#[entity(tag = "enemy")]
struct Enemy;

// Compile-time verified
let player = platform.locate::<Player>()?;
let enemies = platform.locate_all::<Enemy>();
```
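The compile-time guarantee comes from naming a type instead of a string. The sketch below shows one plausible shape of what a derive like this could generate: a trait with an associated selector constant. It is hand-written and hypothetical, and it is not the actual output of `jugar-probar-derive`.

```rust
/// How a selector identifies entities: by unique id or by shared tag.
#[derive(Debug, Clone, Copy, PartialEq)]
enum EntitySelector {
    Id(&'static str),
    Tag(&'static str),
}

/// Roughly what a derive might emit: the selector string lives in exactly
/// one place, attached to a type.
trait Entity {
    const SELECTOR: EntitySelector;
}

struct Player;
impl Entity for Player {
    const SELECTOR: EntitySelector = EntitySelector::Id("player");
}

struct Enemy;
impl Entity for Enemy {
    const SELECTOR: EntitySelector = EntitySelector::Tag("enemy");
}

/// Hypothetical scene holding (id, tag) pairs.
struct Scene {
    entities: Vec<(&'static str, &'static str)>,
}

impl Scene {
    /// Callers pass a type parameter, never a raw string.
    fn locate_all<T: Entity>(&self) -> Vec<&'static str> {
        self.entities
            .iter()
            .filter(|(id, tag)| match T::SELECTOR {
                EntitySelector::Id(want) => *id == want,
                EntitySelector::Tag(want) => *tag == want,
            })
            .map(|(id, _)| *id)
            .collect()
    }
}
```

A misspelled `locate_all::<Playr>()` is a compile error, whereas a misspelled string selector would silently match nothing at run time.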

Waiting for Elements

```rust
use std::time::Duration;

// Wait for entity to exist
let boss = platform.wait_for(
    Locator::id("boss"),
    Duration::from_secs(5),
)?;

// Wait for condition
platform.wait_until(
    || platform.locate(Locator::id("door")).is_some(),
    Duration::from_secs(2),
)?;
```

Locator Chains

```rust
// Find children
let player_weapon = Locator::id("player")
    .child(Locator::tag("weapon"));

// Find parent
let weapon_owner = Locator::id("sword")
    .parent();

// Find siblings
let adjacent_tiles = Locator::id("current_tile")
    .siblings();
```

Actions on Located Elements

```rust
let button = platform.locate(Locator::id("start_button"))?;

// Get info
let pos = platform.get_position(button);
let bounds = platform.get_bounds(button);
let visible = platform.is_visible(button);

// Interact
platform.click(button);
platform.hover(button);

// Check state
let enabled = platform.is_enabled(button);
let focused = platform.is_focused(button);
```

Example Test

```rust
#[test]
fn test_coin_collection() {
    let mut platform = WebPlatform::new_for_test(WebConfig::default());

    // Count initial coins
    let initial_coins = platform.locate_all(Locator::tag("coin")).len();
    assert_eq!(initial_coins, 5);

    // Move player to first coin
    let first_coin = platform.locate_first(Locator::tag("coin")).unwrap();
    let coin_pos = platform.get_position(first_coin);

    // Simulate movement (test helper, not shown here)
    move_player_to(&mut platform, coin_pos);

    // Coin should be collected
    let remaining_coins = platform.locate_all(Locator::tag("coin")).len();
    assert_eq!(remaining_coins, 4);

    // Score should increase
    let score_display = platform.locate(Locator::id("score")).unwrap();
    let score_text = platform.get_text(score_display);
    assert!(score_text.contains("10"));
}
```

Wait Mechanisms

Toyota Way: Jidoka (Automation with Human Touch) - Automatic detection of ready state

Probar provides Playwright-compatible wait mechanisms for synchronization in tests.

Running the Example

```bash
cargo run --example wait_mechanisms
```

Load States

Wait for specific page load states:

```rust
use probar::prelude::*;

// Available load states
let load = LoadState::Load; // window.onload event
let dom = LoadState::DomContentLoaded; // DOMContentLoaded event
let idle = LoadState::NetworkIdle; // No requests for 500ms

// Each state has a default timeout
assert_eq!(LoadState::Load.default_timeout_ms(), 30_000);
assert_eq!(LoadState::NetworkIdle.default_timeout_ms(), 60_000);

// Get event name for JavaScript
assert_eq!(LoadState::Load.event_name(), "load");
assert_eq!(LoadState::DomContentLoaded.event_name(), "DOMContentLoaded");
```

Wait Options

Configure wait behavior with WaitOptions:

```rust
use probar::prelude::*;
use std::time::Duration;

// Default options (30s timeout, 50ms polling)
let default_opts = WaitOptions::default();

// Custom options with builder pattern
let opts = WaitOptions::new()
    .with_timeout(10_000) // 10 second timeout
    .with_poll_interval(100) // Poll every 100ms
    .with_wait_until(LoadState::NetworkIdle);

// Access as Duration
let timeout: Duration = opts.timeout();
let poll: Duration = opts.poll_interval();
```

Navigation Options

Configure navigation-specific waits:

```rust
use probar::prelude::*;

let nav_opts = NavigationOptions::new()
    .with_timeout(5000)
    .with_wait_until(LoadState::DomContentLoaded)
    .with_url(UrlPattern::Contains("dashboard".into()));
```

Page Events

Wait for specific page events (Playwright parity):

```rust
use probar::prelude::*;

// All available page events
let events = [
    PageEvent::Load,
    PageEvent::DomContentLoaded,
    PageEvent::Close,
    PageEvent::Console,
    PageEvent::Dialog,
    PageEvent::Download,
    PageEvent::Popup,
    PageEvent::Request,
    PageEvent::Response,
    PageEvent::PageError,
    PageEvent::WebSocket,
    PageEvent::Worker,
];

// Get event name string
assert_eq!(PageEvent::Load.as_str(), "load");
assert_eq!(PageEvent::Popup.as_str(), "popup");
```

Using the Waiter

Wait for URL Pattern

```rust
use probar::prelude::*;

let mut waiter = Waiter::new();
waiter.set_url("https://example.com/dashboard");

let options = WaitOptions::new().with_timeout(5000);

// Wait for URL to match pattern
let result = waiter.wait_for_url(
    &UrlPattern::Contains("dashboard".into()),
    &options,
)?;

println!("Waited for: {}", result.waited_for);
println!("Elapsed: {:?}", result.elapsed);
```

Wait for Load State

```rust
use probar::prelude::*;

let mut waiter = Waiter::new();
waiter.set_load_state(LoadState::Load);

let options = WaitOptions::new().with_timeout(30_000);

// Wait for page to be fully loaded
waiter.wait_for_load_state(LoadState::Load, &options)?;

// DomContentLoaded is satisfied by Load state
waiter.wait_for_load_state(LoadState::DomContentLoaded, &options)?;
```
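The comment "DomContentLoaded is satisfied by Load state" implies that load states are ordered by progress. The sketch below expresses that rule with a derived `Ord` on a hypothetical enum. It assumes the order DomContentLoaded < Load < NetworkIdle; this page only confirms the first comparison.

```rust
/// Page lifecycle stages, declared in the order a page reaches them,
/// so the derived `Ord` compares by progress (an assumed ordering).
#[derive(Debug, Clone, Copy, PartialEq, Eq, PartialOrd, Ord)]
enum LoadState {
    DomContentLoaded,
    Load,
    NetworkIdle,
}

/// A wait for `target` is satisfied once the page has reached it or any
/// later stage; `None` means nothing has loaded yet.
fn is_satisfied(current: Option<LoadState>, target: LoadState) -> bool {
    matches!(current, Some(c) if c >= target)
}
```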

Wait for Navigation

```rust
use probar::prelude::*;

let mut waiter = Waiter::new();
waiter.set_url("https://example.com/app");
waiter.set_load_state(LoadState::Load);

let nav_opts = NavigationOptions::new()
    .with_timeout(10_000)
    .with_wait_until(LoadState::NetworkIdle)
    .with_url(UrlPattern::Contains("app".into()));

let result = waiter.wait_for_navigation(&nav_opts)?;
```

Wait for Custom Function

```rust
use probar::prelude::*;
use std::sync::atomic::{AtomicUsize, Ordering};
use std::sync::Arc;
use std::time::Duration;

let waiter = Waiter::new();
let options = WaitOptions::new()
    .with_timeout(5000)
    .with_poll_interval(50);

// Wait for counter to reach threshold
let counter = Arc::new(AtomicUsize::new(0));
let counter_clone = counter.clone();

// Simulate async updates
std::thread::spawn(move || {
    for _ in 0..10 {
        std::thread::sleep(Duration::from_millis(100));
        counter_clone.fetch_add(1, Ordering::SeqCst);
    }
});

// Wait until counter >= 5
waiter.wait_for_function(
    || counter.load(Ordering::SeqCst) >= 5,
    &options,
)?;
```
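A function wait is essentially a poll loop: evaluate the condition, stop if it holds, give up once the timeout has elapsed, and otherwise sleep for the poll interval. The standalone sketch below implements that loop with `std::time::Instant`. `wait_for_function` and `Timeout` here are hypothetical stand-ins, not the real signature of `Waiter`.

```rust
use std::time::{Duration, Instant};

/// Hypothetical timeout error carrying the limit in milliseconds.
#[derive(Debug, PartialEq)]
struct Timeout {
    ms: u64,
}

/// Poll `condition` every `poll` until it returns true or `timeout` elapses.
/// On success, returns how long the wait took.
fn wait_for_function<F: FnMut() -> bool>(
    mut condition: F,
    timeout: Duration,
    poll: Duration,
) -> Result<Duration, Timeout> {
    let start = Instant::now();
    loop {
        if condition() {
            return Ok(start.elapsed());
        }
        if start.elapsed() >= timeout {
            return Err(Timeout { ms: timeout.as_millis() as u64 });
        }
        // Never sleep past the deadline.
        let remaining = timeout.saturating_sub(start.elapsed());
        std::thread::sleep(poll.min(remaining));
    }
}
```

The sleep is capped at the remaining time, so the wait never overshoots its deadline by a whole poll interval.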

Wait for Events

```rust
use probar::prelude::*;

let mut waiter = Waiter::new();
let options = WaitOptions::new().with_timeout(5000);

// Record events as they occur
waiter.record_event(PageEvent::Load);
waiter.record_event(PageEvent::DomContentLoaded);

// Wait for specific event
waiter.wait_for_event(&PageEvent::Load, &options)?;

// Clear recorded events
waiter.clear_events();
```

Convenience Functions

```rust
use probar::prelude::*;

// Wait for condition with timeout
wait_until(|| some_condition(), 5000)?;

// Simple timeout (discouraged - use conditions instead)
wait_timeout(100); // Sleep for 100ms
```

Custom Wait Conditions

Implement the WaitCondition trait for custom logic, or wrap a closure with the FnCondition helper:

```rust
use probar::prelude::*;

// Using FnCondition helper
let condition = FnCondition::new(
    || check_some_state(),
    "waiting for state to be ready",
);

let waiter = Waiter::new();
let options = WaitOptions::new().with_timeout(5000);
waiter.wait_for(&condition, &options)?;
```

Network Idle Detection

NetworkIdle waits until no network requests have been active for 500ms:

```rust
use probar::prelude::*;

let mut waiter = Waiter::new();

// Simulate pending requests
waiter.set_pending_requests(3); // 3 active requests

// Network is NOT idle
assert!(!waiter.is_network_idle());

// All requests complete
waiter.set_pending_requests(0);

// After 500ms of no activity, network is idle
// (In real usage, this is tracked automatically)
```
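The 500ms rule needs two pieces of state: how many requests are in flight, and when the last one started or finished. The sketch below takes timestamps as arguments so that it can be tested without sleeping. `NetworkTracker` is hypothetical and only illustrates the rule described above.

```rust
use std::time::Duration;

/// Hypothetical idle tracker driven by explicit timestamps.
struct NetworkTracker {
    pending: u32,
    last_activity: Duration, // time of the latest request start or finish
    idle_window: Duration,
}

impl NetworkTracker {
    fn new() -> Self {
        Self {
            pending: 0,
            last_activity: Duration::ZERO,
            idle_window: Duration::from_millis(500),
        }
    }

    fn request_started(&mut self, now: Duration) {
        self.pending += 1;
        self.last_activity = now;
    }

    fn request_finished(&mut self, now: Duration) {
        self.pending = self.pending.saturating_sub(1);
        self.last_activity = now;
    }

    /// Idle means nothing in flight AND no activity for the full window.
    fn is_idle(&self, now: Duration) -> bool {
        self.pending == 0 && now.saturating_sub(self.last_activity) >= self.idle_window
    }
}
```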

Error Handling

Wait operations return a ProbarResult; a wait that exceeds its timeout fails with ProbarError::Timeout:

```rust
use probar::prelude::*;

let waiter = Waiter::new();
let options = WaitOptions::new()
    .with_timeout(100)
    .with_poll_interval(10);

match waiter.wait_for_function(|| false, &options) {
    Ok(result) => println!("Success: {:?}", result.elapsed),
    Err(ProbarError::Timeout { ms }) => {
        println!("Timed out after {}ms", ms);
    }
    Err(e) => println!("Other error: {}", e),
}
```

Best Practices

- Prefer explicit waits over timeouts

```rust
// Good: Wait for specific condition
waiter.wait_for_load_state(LoadState::NetworkIdle, &options)?;

// Avoid: Fixed sleep
wait_timeout(5000);
```

- Use appropriate polling intervals

```rust
// Fast polling for quick checks
let fast = WaitOptions::new().with_poll_interval(10);

// Slower polling for resource-intensive checks
let slow = WaitOptions::new().with_poll_interval(200);
```

- Set realistic timeouts

```rust
// Navigation can be slow
let nav = NavigationOptions::new().with_timeout(30_000);

// UI updates should be fast
let ui = WaitOptions::new().with_timeout(5000);
```

- Combine with assertions

```rust
// Wait then assert
waiter.wait_for_load_state(LoadState::Load, &options)?;
expect(locator).to_be_visible();
```

Example: Full Page Load Flow

```rust
use probar::prelude::*;

fn wait_for_page_ready() -> ProbarResult<()> {
    let mut waiter = Waiter::new();

    // 1. Wait for navigation to target URL
    let nav_opts = NavigationOptions::new()
        .with_timeout(30_000)
        .with_url(UrlPattern::Contains("/app".into()));
    waiter.set_url("https://example.com/app");
    waiter.wait_for_navigation(&nav_opts)?;

    // 2. Wait for DOM to be ready
    waiter.set_load_state(LoadState::DomContentLoaded);
    let opts = WaitOptions::new().with_timeout(10_000);
    waiter.wait_for_load_state(LoadState::DomContentLoaded, &opts)?;

    // 3. Wait for network to settle
    waiter.set_load_state(LoadState::NetworkIdle);
    waiter.wait_for_load_state(LoadState::NetworkIdle, &opts)?;

    // 4. Wait for app-specific ready state
    waiter.wait_for_function(
        || app_is_initialized(),
        &opts,
    )?;

    Ok(())
}
```

Assertions

Probar provides a rich set of assertions for testing game state with full Playwright parity.

Assertion Flow

```text
┌─────────────────────────────────────────────────────────────────┐
│ PROBAR ASSERTION SYSTEM │
├─────────────────────────────────────────────────────────────────┤
│ │
│ ┌──────────┐ ┌──────────────┐ ┌──────────────┐ │
│ │ Input │───►│ Assertion │───►│ Result │ │
│ │ Value │ │ Function │ │ Struct │ │
│ └──────────┘ └──────────────┘ └──────────────┘ │
│ │ │ │
│ ▼ ▼ │
│ ┌──────────────────┐ ┌───────────────┐ │
│ │ • equals() │ │ passed: bool │ │
│ │ • in_range() │ │ message: str │ │
│ │ • contains() │ │ expected: opt │ │
│ │ • matches() │ │ actual: opt │ │
│ └──────────────────┘ └───────────────┘ │
│ │
└─────────────────────────────────────────────────────────────────┘
```
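The result struct in the diagram can be sketched as plain data plus constructor functions. `AssertionResult` and its three constructors below are hypothetical stand-ins for Probar's `Assertion`, written to show the pass/fail logic and how the `expected`/`actual` fields get filled. Only the "Values are equal" message is taken from this page.

```rust
use std::fmt::Debug;

/// Hypothetical result record with the four fields shown in the diagram.
#[derive(Debug, Clone, PartialEq)]
struct AssertionResult {
    passed: bool,
    message: String,
    expected: Option<String>,
    actual: Option<String>,
}

impl AssertionResult {
    /// Exact equality for any comparable, printable value.
    fn equals<T: PartialEq + Debug>(actual: &T, expected: &T) -> Self {
        let passed = actual == expected;
        let message = if passed {
            "Values are equal".to_string()
        } else {
            format!("expected {expected:?}, got {actual:?}")
        };
        Self {
            passed,
            message,
            expected: Some(format!("{expected:?}")),
            actual: Some(format!("{actual:?}")),
        }
    }

    /// Inclusive range check: min <= value <= max.
    fn in_range(value: f64, min: f64, max: f64) -> Self {
        let passed = (min..=max).contains(&value);
        let verb = if passed { "is within" } else { "is outside" };
        Self {
            passed,
            message: format!("{value} {verb} [{min}, {max}]"),
            expected: Some(format!("[{min}, {max}]")),
            actual: Some(value.to_string()),
        }
    }

    /// Float comparison within an absolute tolerance.
    fn approx_eq(actual: f64, expected: f64, epsilon: f64) -> Self {
        let diff = (actual - expected).abs();
        let passed = diff <= epsilon;
        Self {
            passed,
            message: format!("|{actual} - {expected}| = {diff} (tolerance {epsilon})"),
            expected: Some(expected.to_string()),
            actual: Some(actual.to_string()),
        }
    }
}
```

The tolerance-based check matters because `0.1 + 0.2` and `0.3` are not equal as `f64` values, so `equals` fails on them while `approx_eq` passes.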

Playwright-Style Element Assertions (PMAT-004)

Probar supports Playwright's expect() API for fluent assertions:

```rust
use probar::{expect, Locator};

let button = Locator::new("button#submit");
let checkbox = Locator::new("input[type='checkbox']");
let input = Locator::new("input#username");

// Visibility assertions
expect(button.clone()).to_be_visible();
expect(button.clone()).to_be_hidden();

// Text assertions
expect(button.clone()).to_have_text("Submit");
expect(button.clone()).to_contain_text("Sub");

// Count assertion
expect(Locator::new(".item")).to_have_count(5);

// Element state assertions (PMAT-004)
expect(button.clone()).to_be_enabled();
expect(button.clone()).to_be_disabled();
expect(checkbox.clone()).to_be_checked();
expect(input.clone()).to_be_editable();
expect(input.clone()).to_be_focused();
expect(Locator::new(".container")).to_be_empty();

// Value assertions
expect(input.clone()).to_have_value("john_doe");

// CSS assertions
expect(button.clone()).to_have_css("color", "rgb(0, 255, 0)");
expect(button.clone()).to_have_css("display", "flex");

// Class/ID assertions
expect(button.clone()).to_have_class("active");
expect(button.clone()).to_have_id("submit-btn");

// Attribute assertions
expect(input.clone()).to_have_attribute("type", "text");
expect(button).to_have_attribute("aria-label", "Submit form");
```

Assertion Validation

```rust
use probar::{expect, Locator, ExpectAssertion};

let locator = Locator::new("input#score");

// Text validation
let text_assertion = expect(locator.clone()).to_have_text("100");
assert!(text_assertion.validate("100").is_ok());
assert!(text_assertion.validate("50").is_err());

// Count validation
let count_assertion = expect(locator.clone()).to_have_count(3);
assert!(count_assertion.validate_count(3).is_ok());
assert!(count_assertion.validate_count(5).is_err());

// State validation (for boolean states)
let enabled = expect(locator.clone()).to_be_enabled();
assert!(enabled.validate_state(true).is_ok()); // Element is enabled
assert!(enabled.validate_state(false).is_err()); // Element is disabled

// Class validation (checks within class list)
let class_assertion = expect(locator).to_have_class("active");
assert!(class_assertion.validate("btn active primary").is_ok());
assert!(class_assertion.validate("btn disabled").is_err());
```
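The class check passes for `"btn active primary"` because the class attribute is treated as a whitespace-separated list, not as a plain string. The minimal sketch below uses a hypothetical `validate_has_class` function to show that rule. It also shows why token matching matters: a plain substring test would wrongly accept `"btn inactive"`.

```rust
/// Passes when `wanted` appears as a whole token in the element's
/// space-separated `class` attribute.
fn validate_has_class(class_attr: &str, wanted: &str) -> Result<(), String> {
    if class_attr.split_whitespace().any(|class| class == wanted) {
        Ok(())
    } else {
        Err(format!("expected class '{wanted}' in \"{class_attr}\""))
    }
}
```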

Basic Assertions

```rust
use probar::Assertion;

// Equality
let eq = Assertion::equals(&actual, &expected);
assert!(eq.passed);
assert_eq!(eq.message, "Values are equal");

// Inequality
let ne = Assertion::not_equals(&a, &b);

// Boolean
let truthy = Assertion::is_true(condition);
let falsy = Assertion::is_false(condition);
```

Numeric Assertions

```rust
// Range check
let range = Assertion::in_range(value, min, max);

// Approximate equality (for floats)
let approx = Assertion::approx_eq(3.14159, std::f64::consts::PI, 0.001);

// Greater/Less than
let gt = Assertion::greater_than(value, threshold);
let lt = Assertion::less_than(value, threshold);
let gte = Assertion::greater_than_or_equal(value, threshold);
let lte = Assertion::less_than_or_equal(value, threshold);
```

Collection Assertions

```rust
// Contains
let contains = Assertion::contains(&collection, &item);

// Length
let len = Assertion::has_length(&vec, expected_len);

// Empty
let empty = Assertion::is_empty(&vec);
let not_empty = Assertion::is_not_empty(&vec);

// All match predicate
let all = Assertion::all_match(&vec, |x| x > 0);

// Any match predicate
let any = Assertion::any_match(&vec, |x| x == 42);
```

String Assertions

```rust
// Contains substring
let contains = Assertion::string_contains(&text, "expected");

// Starts/ends with
let starts = Assertion::starts_with(&text, "prefix");
let ends = Assertion::ends_with(&text, "suffix");

// Regex match
let matches = Assertion::matches_regex(&text, r"\d{3}-\d{4}");

// Length
let len = Assertion::string_length(&text, expected_len);
```

Option/Result Assertions

```rust
// Option
let some = Assertion::is_some(&option_value);
let none = Assertion::is_none(&option_value);

// Result
let ok = Assertion::is_ok(&result);
let err = Assertion::is_err(&result);
```

Custom Assertions

```rust
// Create a custom assertion
fn assert_valid_score(score: u32) -> Assertion {
    Assertion::custom(
        score <= 10,
        format!("Score {} should be <= 10", score),
    )
}

// Use it
let assertion = assert_valid_score(game.score);
assert!(assertion.passed);
```

Assertion Result

All assertions return an Assertion struct:

```rust
pub struct Assertion {
    pub passed: bool,
    pub message: String,
    pub expected: Option<String>,
    pub actual: Option<String>,
}
```

Combining Assertions

```rust
// All must pass
let all_pass = Assertion::all(&[
    Assertion::in_range(x, 0.0, 800.0),
    Assertion::in_range(y, 0.0, 600.0),
    Assertion::greater_than(health, 0),
]);

// At least one must pass
let any_pass = Assertion::any(&[
    Assertion::equals(&state, &State::Running),
    Assertion::equals(&state, &State::Paused),
]);
```
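To make the combinator semantics concrete, here is a std-only sketch using a hypothetical `Check` type that mirrors the `passed`/`message` fields of `Assertion`. It assumes `all` reports every failing sub-check and that the empty cases follow `Iterator::all`/`Iterator::any` (an empty `all` passes, an empty `any` fails); Probar's own behaviour may differ.

```rust
/// Minimal stand-in for an assertion result (hypothetical; mirrors the
/// `passed`/`message` fields of the Assertion struct shown above).
#[derive(Debug, Clone)]
pub struct Check {
    pub passed: bool,
    pub message: String,
}

impl Check {
    pub fn in_range(value: f64, min: f64, max: f64) -> Check {
        Check {
            passed: value >= min && value <= max,
            message: format!("{} in [{}, {}]", value, min, max),
        }
    }

    /// Passes only if every check passes; the message lists the failures.
    pub fn all(checks: &[Check]) -> Check {
        let failures: Vec<&str> = checks
            .iter()
            .filter(|c| !c.passed)
            .map(|c| c.message.as_str())
            .collect();
        Check {
            passed: failures.is_empty(),
            message: if failures.is_empty() {
                "all checks passed".to_string()
            } else {
                format!("failed: {}", failures.join("; "))
            },
        }
    }

    /// Passes if at least one check passes (an empty list fails).
    pub fn any(checks: &[Check]) -> Check {
        let passed = checks.iter().any(|c| c.passed);
        Check {
            passed,
            message: if passed {
                "at least one check passed".to_string()
            } else {
                "no check passed".to_string()
            },
        }
    }
}
```

Collecting the failing messages in `all` means one combined assertion can still tell you *which* bound was violated.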

Game-Specific Assertions

```rust
// Entity exists
let exists = Assertion::entity_exists(&world, entity_id);

// Component present on an entity
let has_component = Assertion::has_component::<Position>(&world, entity);

// Position bounds
let in_bounds = Assertion::position_in_bounds(
    position,
    Bounds::new(0.0, 0.0, 800.0, 600.0),
);

// Collision occurred
let collided = Assertion::entities_colliding(&world, entity_a, entity_b);
```

Example Test

```rust
#[test]
fn test_game_state_validity() {
    let mut platform = WebPlatform::new_for_test(WebConfig::default());

    // Advance the game 100 frames at 60 FPS
    for _ in 0..100 {
        platform.advance_frame(1.0 / 60.0);
    }

    let state = platform.get_game_state();

    // Multiple assertions
    assert!(Assertion::in_range(state.ball.x, 0.0, 800.0).passed);
    assert!(Assertion::in_range(state.ball.y, 0.0, 600.0).passed);
    assert!(Assertion::in_range(state.paddle_left.y, 0.0, 600.0).passed);
    assert!(Assertion::in_range(state.paddle_right.y, 0.0, 600.0).passed);
    assert!(Assertion::lte(state.score_left, 10).passed);
    assert!(Assertion::lte(state.score_right, 10).passed);
}
```

Soft Assertions

Toyota Way: Kaizen (Continuous Improvement) - Collect all failures before stopping

Soft assertions allow you to collect multiple assertion failures without immediately stopping the test. This is useful for validating multiple related conditions in a single test run.

Basic Usage

```rust
use probar::prelude::*;

fn test_form_validation() -> ProbarResult<()> {
    let mut soft = SoftAssertions::new();

    // Collect all validation failures
    soft.assert_eq("username", "alice", expected_username);
    soft.assert_eq("email", "alice@example.com", expected_email);
    soft.assert_eq("role", "admin", expected_role);

    // Check all assertions at once
    soft.verify()?;
    Ok(())
}
```
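The core idea behind soft assertions fits in a few lines: record each mismatch instead of panicking, then fail once with the full list. The std-only sketch below is a hypothetical `SoftCollector`, not Probar's `SoftAssertions`; the `(name, expected, actual)` argument order is an assumption.

```rust
use std::fmt::Debug;

/// Collects assertion failures instead of stopping at the first one.
/// Hypothetical sketch; not Probar's SoftAssertions implementation.
#[derive(Default)]
pub struct SoftCollector {
    failures: Vec<String>,
}

impl SoftCollector {
    pub fn new() -> Self {
        Self::default()
    }

    /// Records a failure if `expected != actual`, then keeps going.
    pub fn assert_eq<T: PartialEq + Debug>(&mut self, name: &str, expected: T, actual: T) {
        if expected != actual {
            self.failures
                .push(format!("{}: expected {:?}, got {:?}", name, expected, actual));
        }
    }

    /// Reports every collected failure at once.
    pub fn verify(self) -> Result<(), String> {
        if self.failures.is_empty() {
            Ok(())
        } else {
            Err(format!(
                "{} soft assertion(s) failed:\n{}",
                self.failures.len(),
                self.failures.join("\n")
            ))
        }
    }
}
```

Because `verify` consumes the collector, a test cannot accidentally keep adding checks after the verdict has been reported.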

Running the Example

cargo run --example soft_assertions

Retry Assertions

Toyota Way: Jidoka (Built-in Quality) - Automatic retry with intelligent backoff

Retry assertions re-evaluate a failing condition at a configurable interval until it passes or a configurable timeout expires, which makes them well suited to testing asynchronous state changes.

Basic Usage

```rust
use std::time::Duration;

use probar::prelude::*;

fn test_async_state() -> ProbarResult<()> {
    let retry = RetryAssertion::new()
        .with_timeout(Duration::from_secs(5))
        .with_interval(Duration::from_millis(100));

    retry.retry_true(|| {
        // Check a condition that may take time to become true
        check_element_visible()
    })?;
    Ok(())
}
```
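The timeout/interval pair describes a simple polling loop. Below is a std-only sketch of fixed-interval retry under that reading. `retry_true` here is a hypothetical free function, not Probar's `RetryAssertion`, and it performs no backoff: it just sleeps for `interval` between attempts.

```rust
use std::thread;
use std::time::{Duration, Instant};

/// Polls `condition` every `interval` until it returns true or `timeout`
/// has elapsed. Returns the number of attempts on success.
/// Hypothetical helper illustrating fixed-interval retry.
pub fn retry_true<F: FnMut() -> bool>(
    timeout: Duration,
    interval: Duration,
    mut condition: F,
) -> Result<u32, String> {
    let start = Instant::now();
    let mut attempts = 0;
    loop {
        attempts += 1;
        if condition() {
            return Ok(attempts);
        }
        // Give up once the deadline has passed; the last sleep may overshoot
        // the timeout by up to one interval.
        if start.elapsed() >= timeout {
            return Err(format!(
                "condition still false after {} attempts ({:?})",
                attempts, timeout
            ));
        }
        thread::sleep(interval);
    }
}
```

Checking the condition before the deadline means a condition that is already true passes on the first attempt, with no sleep.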

Running the Example

cargo run --example retry_assertions

Equation Verification

Toyota Way: Poka-Yoke (Mistake-Proofing) - Mathematical correctness guarantees

Equation verification validates physics and game math invariants with floating-point tolerance handling.

Basic Usage

```rust
use probar::prelude::*;

fn test_physics() -> ProbarResult<()> {
    let mut verifier = EquationVerifier::new("physics_test");

    // Verify the kinematics equation v = v0 + a*t
    let v0 = 10.0;
    let a = 5.0;
    let t = 2.0;
    let v = v0 + a * t; // 10 + 5 * 2 = 20

    verifier.verify_eq("v = v0 + at", 20.0, v);
    verifier.verify_in_range("speed", v, 0.0, 100.0);

    assert!(verifier.all_passed());
    Ok(())
}
```
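Tolerances matter most when the value comes from a numerical integrator rather than a closed-form expression. The std-only sketch below steps a body under constant acceleration with semi-implicit Euler at a fixed `dt` and compares the result against v = v0 + a·t and x = v0·t + ½·a·t². The function names are illustrative, not Probar API. Velocity matches up to rounding. Position overshoots by exactly ½·a·t·dt (1/12 for v0 = 10, a = 5, t = 2, dt = 1/60), so a position check needs a tolerance sized to the timestep, not to machine epsilon.

```rust
/// Closed-form kinematics under constant acceleration, starting at x = 0.
/// Returns (velocity, position).
pub fn analytic(v0: f64, a: f64, t: f64) -> (f64, f64) {
    (v0 + a * t, v0 * t + 0.5 * a * t * t)
}

/// Semi-implicit Euler with a fixed timestep, as a game loop would do it.
pub fn integrate(v0: f64, a: f64, dt: f64, steps: u32) -> (f64, f64) {
    let (mut v, mut x) = (v0, 0.0);
    for _ in 0..steps {
        v += a * dt; // update velocity first...
        x += v * dt; // ...then position using the new velocity
    }
    (v, x)
}

/// Absolute-tolerance comparison.
pub fn approx_eq(expected: f64, actual: f64, tol: f64) -> bool {
    (expected - actual).abs() <= tol
}
```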

Running the Example

cargo run --example equation_verify

Simulation

Probar provides deterministic game simulation for testing. It is built on trueno's simulation testing framework (v0.8.5+), which applies Toyota Production System principles to quality assurance.

Simulation Architecture

```text
  DETERMINISTIC SIMULATION

  Seed (u64) ────────┐
                     ├──► Simulation loop
  Config (frames) ───┘      Frame 0 ─► Frame 1 ─► ... ─► Frame N
                            (each frame: [Input] ─► [State])
                                      │
                                      ▼
                            Recording
                              • state_hash: u64
                              • frames: Vec<FrameInputs>
                              • snapshots: Vec<StateSnapshot>
                                      │
               ┌──────────────────────┼──────────────────────┐
               ▼                      ▼                      ▼
         Replay verify         Invariant check        Coverage report
```

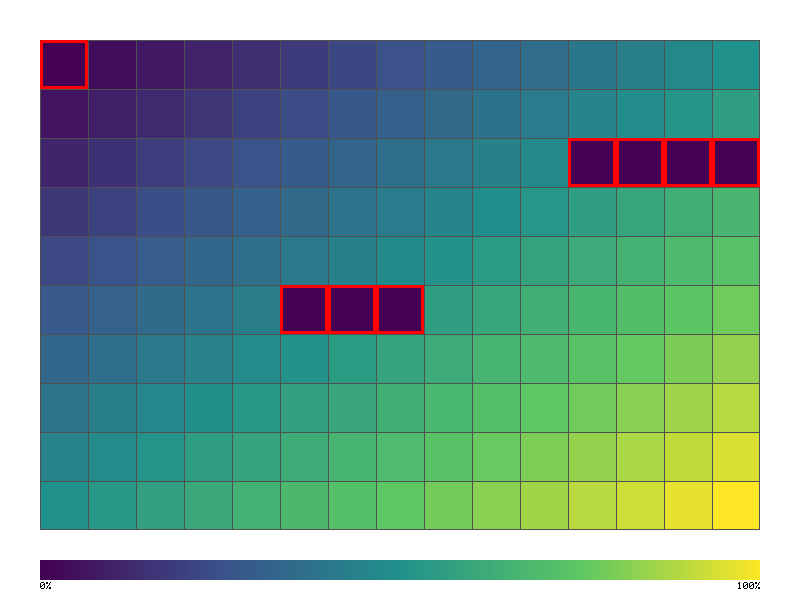

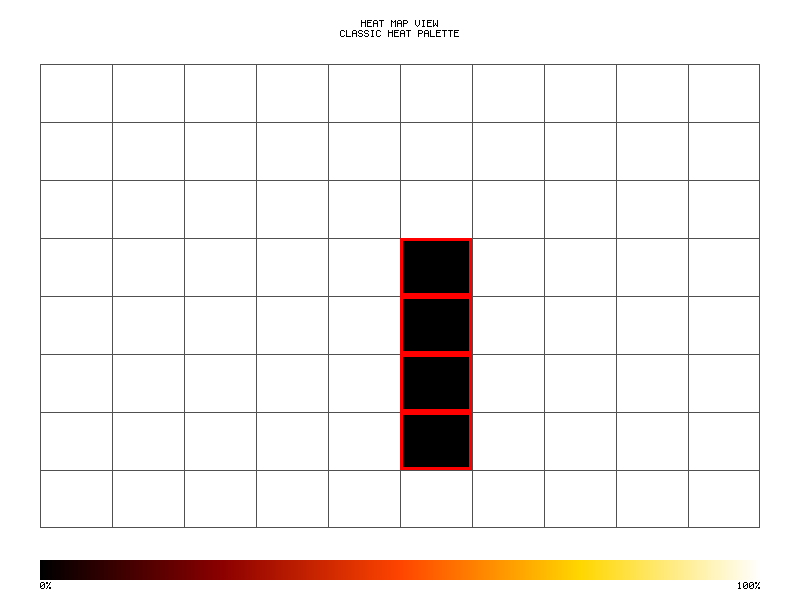

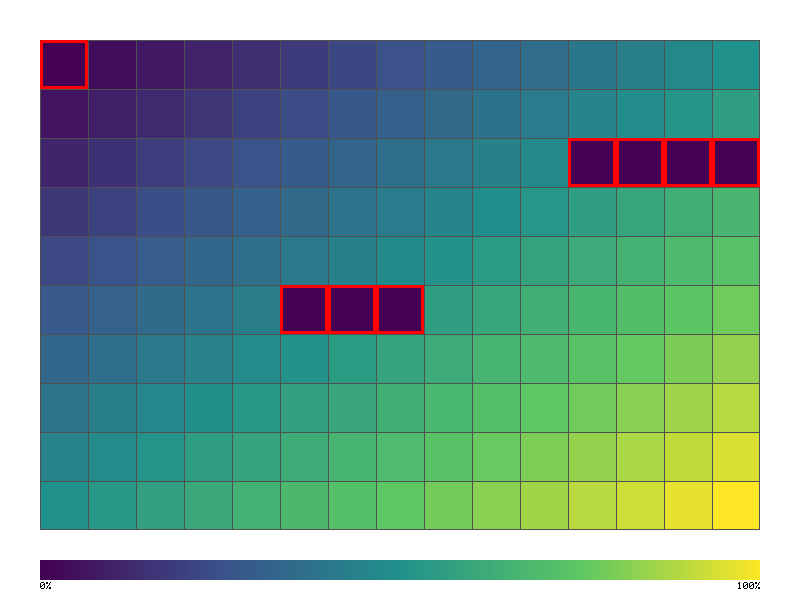

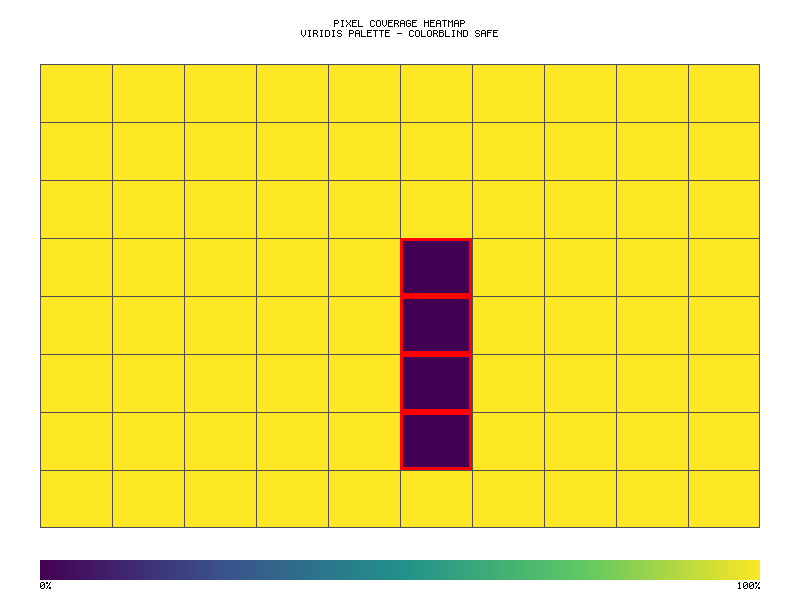

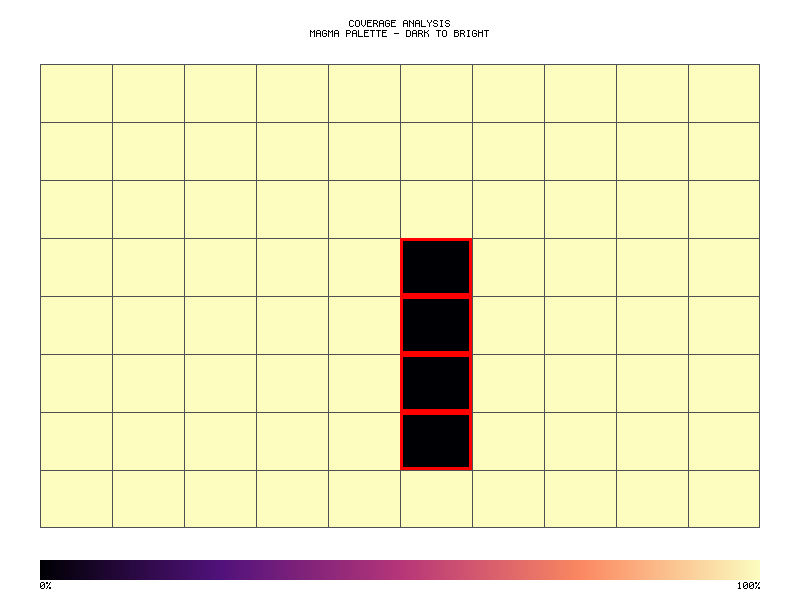

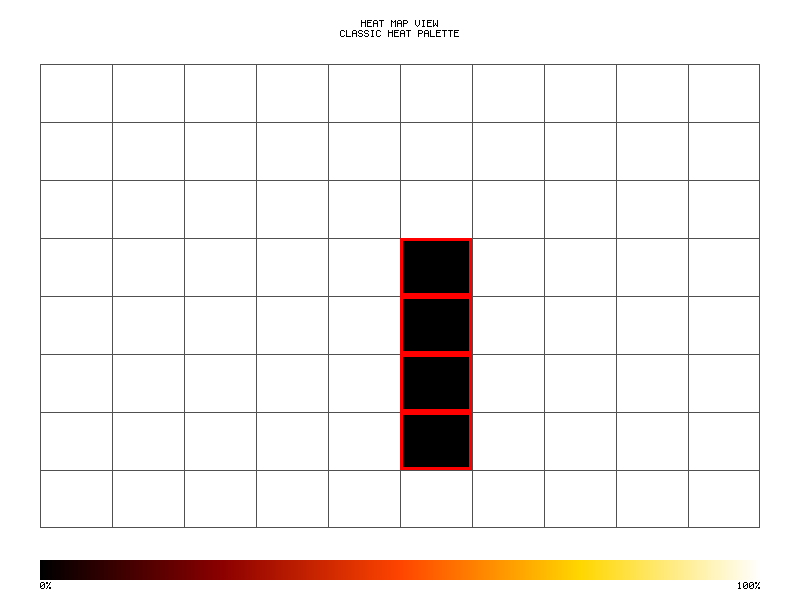

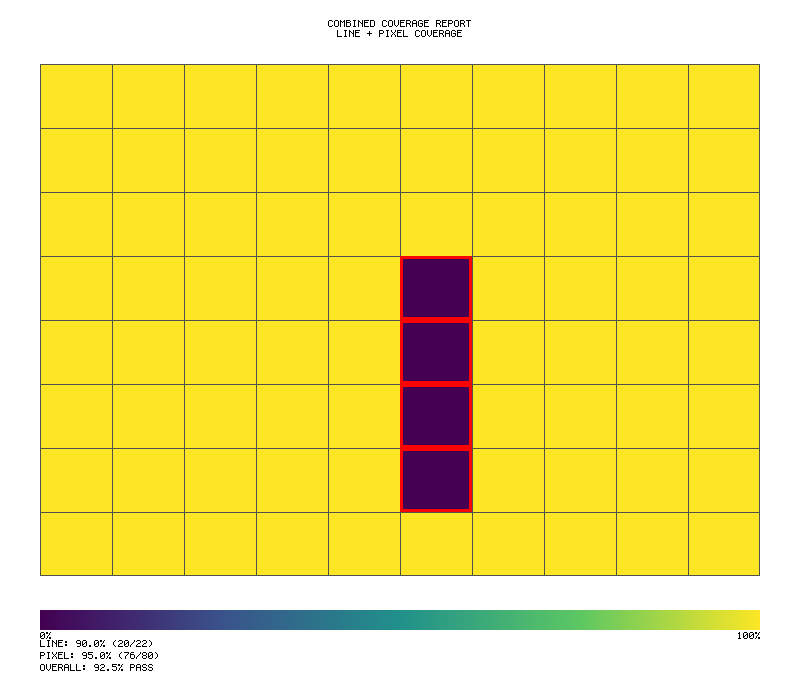

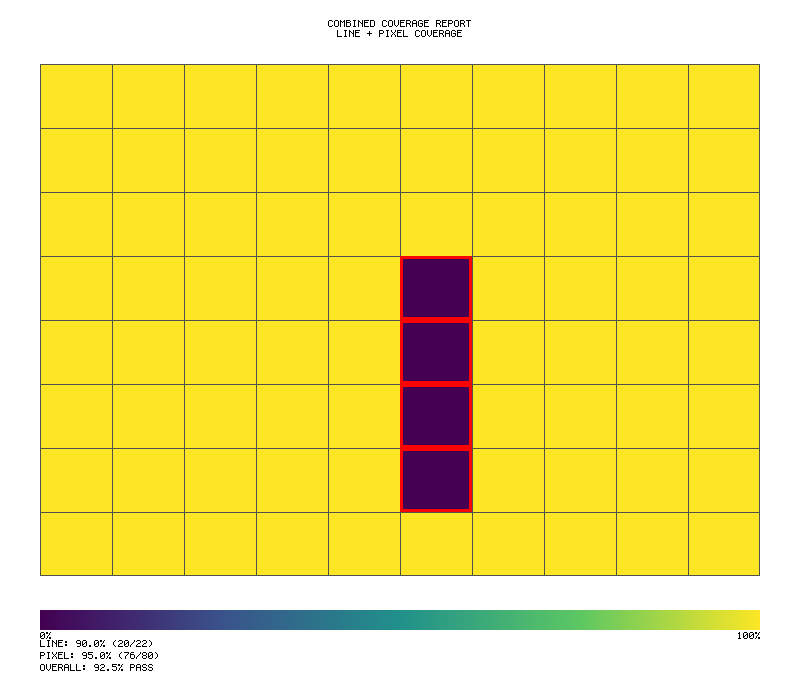

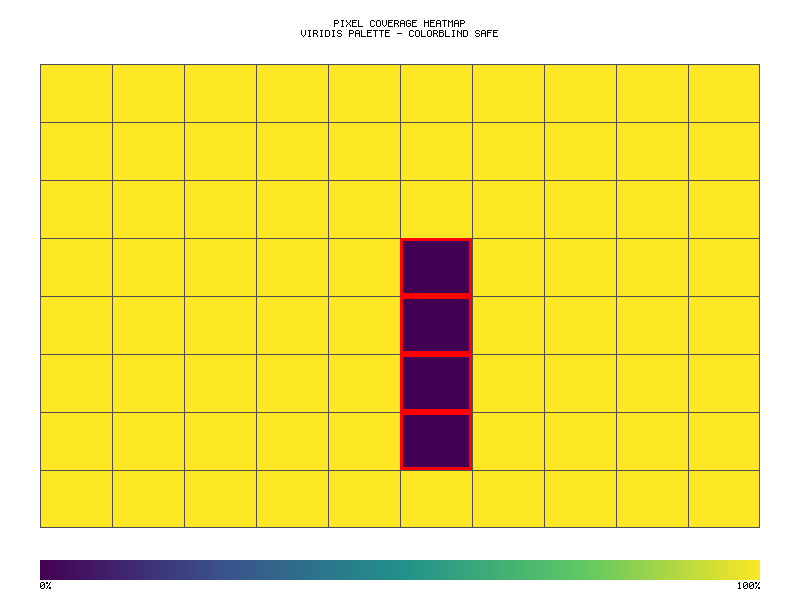

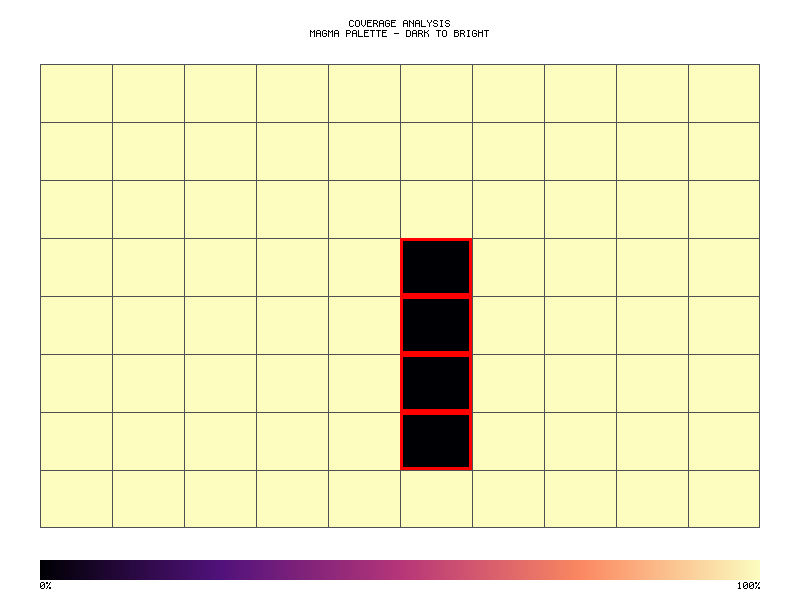

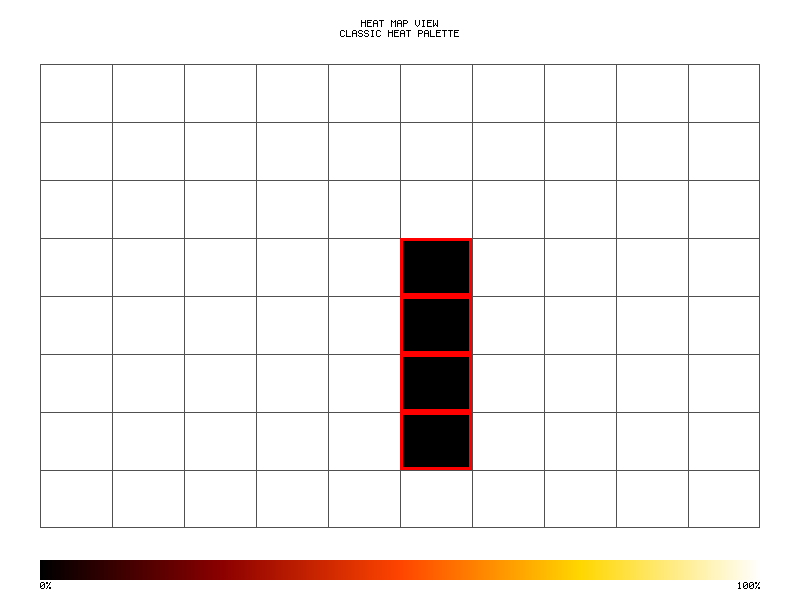

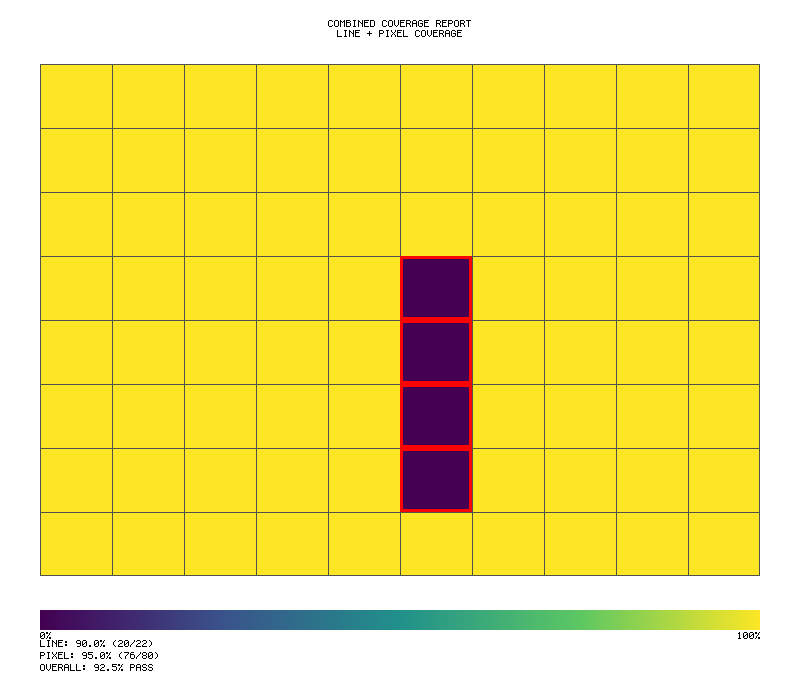

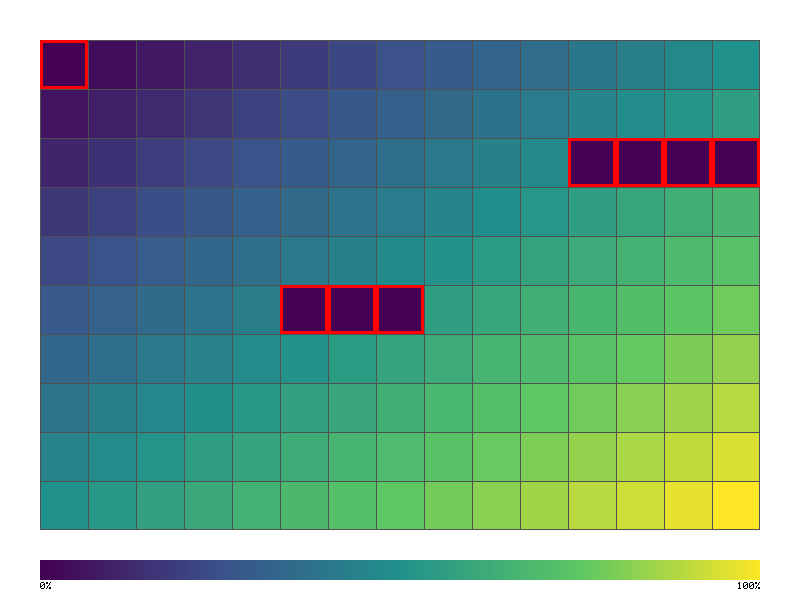

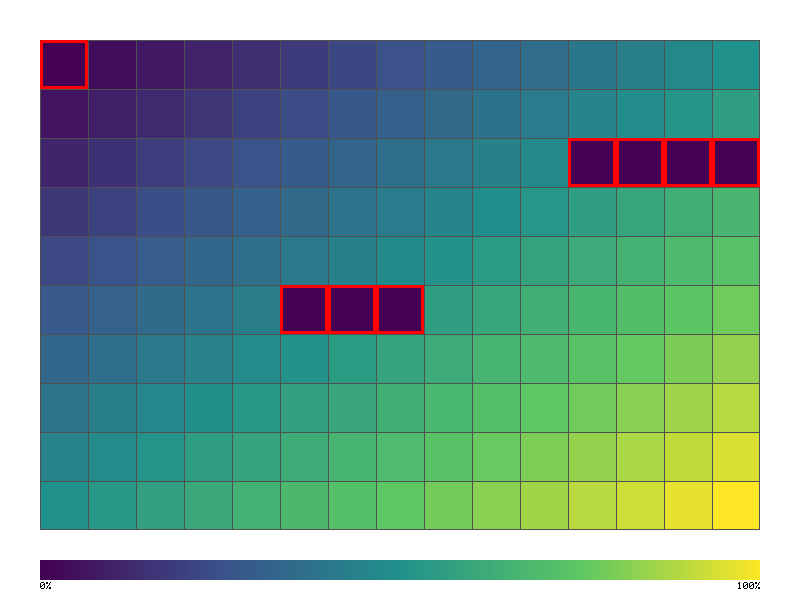

Simulation runs generate coverage heat maps showing execution hotspots

Basic Simulation

```rust
use jugar_probar::{run_simulation, SimulationConfig};

let config = SimulationConfig::new(seed, num_frames);
let result = run_simulation(config, |_frame| {
    // Return the inputs for this frame
    vec![] // No inputs
});

assert!(result.completed);
println!("Final state hash: {}", result.state_hash);
```

Simulation with Inputs

```rust
use jugar_probar::{run_simulation, SimulationConfig, InputEvent};

let config = SimulationConfig::new(42, 300);
let result = run_simulation(config, |frame| {
    // Move the paddle up for the first 100 frames
    if frame < 100 {
        vec![InputEvent::key_held("KeyW")]
    } else {
        vec![]
    }
});
```

Input Events

```rust
// Keyboard
InputEvent::key_press("Space");    // Just pressed
InputEvent::key_held("KeyW");      // Held down
InputEvent::key_release("Escape"); // Just released

// Mouse
InputEvent::mouse_move(400.0, 300.0);
InputEvent::mouse_press(MouseButton::Left);
InputEvent::mouse_release(MouseButton::Left);

// Touch
InputEvent::touch_start(0, 100.0, 200.0); // id, x, y
InputEvent::touch_move(0, 150.0, 250.0);
InputEvent::touch_end(0);
```

Deterministic Replay

```rust
use jugar_probar::{run_simulation, run_replay, InputEvent, SimulationConfig};

// Record a simulation
let config = SimulationConfig::new(42, 500);
let recording = run_simulation(config, |_frame| {
    vec![InputEvent::key_press("ArrowUp")]
});

// Replay it
let replay = run_replay(&recording);

// Verify determinism
assert!(replay.determinism_verified);
assert_eq!(recording.state_hash, replay.state_hash);
```
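Record-then-replay works because the final state is a pure function of the seed and the per-frame inputs. A self-contained std-only sketch of that property follows. `State`, `Input`, `simulate` and `state_hash` are hypothetical names, not jugar_probar's types. The RNG is xorshift64 and the hash is FNV-1a over the raw float bits. FNV-1a is used instead of `std`'s `DefaultHasher` because its algorithm is fixed, so a stored hash stays meaningful across builds.

```rust
#[derive(Debug, Clone, Copy, PartialEq)]
pub struct State {
    pub paddle_y: f32,
    pub ball_x: f32,
}

#[derive(Debug, Clone, Copy, PartialEq)]
pub enum Input {
    Up,
    Down,
}

/// FNV-1a (64-bit) over the little-endian bits of every field.
pub fn state_hash(s: &State) -> u64 {
    let mut h: u64 = 0xcbf2_9ce4_8422_2325; // FNV offset basis
    for field in [s.paddle_y.to_bits(), s.ball_x.to_bits()] {
        for byte in field.to_le_bytes() {
            h ^= byte as u64;
            h = h.wrapping_mul(0x0000_0100_0000_01b3); // FNV prime
        }
    }
    h
}

/// Runs `frames` fixed steps and returns the final state plus the recorded
/// inputs. The only randomness comes from `seed`.
pub fn simulate(
    seed: u64,
    frames: u32,
    inputs: impl Fn(u32) -> Vec<Input>,
) -> (State, Vec<Vec<Input>>) {
    let mut rng = seed | 1; // xorshift64 state must be non-zero
    let mut s = State { paddle_y: 300.0, ball_x: 400.0 };
    let mut recording = Vec::with_capacity(frames as usize);
    for frame in 0..frames {
        let frame_inputs = inputs(frame);
        for input in &frame_inputs {
            match input {
                Input::Up => s.paddle_y -= 5.0,
                Input::Down => s.paddle_y += 5.0,
            }
        }
        // xorshift64 step (13, 7, 17): deterministic drift of -1, 0 or +1
        rng ^= rng << 13;
        rng ^= rng >> 7;
        rng ^= rng << 17;
        s.ball_x += (rng % 3) as f32 - 1.0;
        recording.push(frame_inputs);
    }
    (s, recording)
}
```

Replaying means calling `simulate` again with the same seed and an input function that reads back the recording, for example `|f| rec[f as usize].clone()`. If the final hashes match, the run was deterministic.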

Simulation Config

```rust
pub struct SimulationConfig {
    pub seed: u64,     // Random seed for reproducibility
    pub frames: u32,   // Number of frames to simulate
    pub fixed_dt: f32, // Timestep (default: 1/60)
    pub max_time: f32, // Max real time (for timeout)
}

let config = SimulationConfig {
    seed: 12345,
    frames: 1000,
    fixed_dt: 1.0 / 60.0,
    max_time: 60.0,
};
```

Simulation Result

```rust
pub struct SimulationResult {
    pub completed: bool,
    pub frames_run: u32,
    pub state_hash: u64,
    pub final_state: GameState,
    pub recording: Recording,
    pub events: Vec<GameEvent>,
}
```

Recording Format

```rust
pub struct Recording {
    pub seed: u64,
    pub frames: Vec<FrameInputs>,
    pub state_snapshots: Vec<StateSnapshot>,
}

pub struct FrameInputs {
    pub frame: u32,
    pub inputs: Vec<InputEvent>,
}
```

Invariant Checking

```rust
use jugar_probar::{run_simulation_with_invariants, Invariant};

let invariants = vec![
    Invariant::new("ball_in_bounds", |state| {
        state.ball.x >= 0.0 && state.ball.x <= 800.0 &&
        state.ball.y >= 0.0 && state.ball.y <= 600.0
    }),
    Invariant::new("score_valid", |state| {
        state.score_left <= 10 && state.score_right <= 10
    }),
];

let result = run_simulation_with_invariants(config, invariants, |_| vec![]);

// Report violations before asserting, so they are printed when the test fails
for violation in &result.violations {
    println!("Violation at frame {}: {}", violation.frame, violation.invariant);
}
assert!(result.all_invariants_held);
```
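The invariant loop boils down to this: step, evaluate every invariant, and record each violation with its frame number instead of stopping. A generic std-only sketch follows. `Invariant`, `Violation` and `run_with_invariants` are local hypothetical types that share names with the jugar_probar ones but not necessarily their signatures.

```rust
/// A named invariant over a state type `S` (hypothetical, std only).
pub struct Invariant<S> {
    pub name: &'static str,
    pub check: fn(&S) -> bool,
}

#[derive(Debug, PartialEq)]
pub struct Violation {
    pub frame: u32,
    pub invariant: &'static str,
}

/// Advances `state` with `step` for `frames` frames and checks every
/// invariant after each step, collecting all violations.
pub fn run_with_invariants<S>(
    mut state: S,
    frames: u32,
    step: impl Fn(&mut S, u32),
    invariants: &[Invariant<S>],
) -> Vec<Violation> {
    let mut violations = Vec::new();
    for frame in 0..frames {
        step(&mut state, frame);
        for inv in invariants {
            if !(inv.check)(&state) {
                violations.push(Violation { frame, invariant: inv.name });
            }
        }
    }
    violations
}
```

For example, a ball that starts at x = 790 and moves +4 per frame with no bounce logic breaks `ball_in_bounds` from frame 2 onward (x = 802). The returned list pinpoints exactly where to start debugging.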

Scenario Testing

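```rust
use jugar_probar::{run_simulation, InputEvent, SimulationConfig};

#[test]
fn test_game_scenarios() {
    // Every closure has its own type, so a list of them needs a common type.
    // Non-capturing closures coerce to plain `fn` pointers (frame index assumed
    // to be u32, matching SimulationConfig::frames).
    let scenarios: [(&str, fn(u32) -> Vec<InputEvent>); 3] = [
        ("player_wins", |f| if f < 500 { vec![InputEvent::key_held("KeyW")] } else { vec![] }),
        ("ai_wins", |_| vec![]),                              // No player input
        ("timeout", |_| vec![InputEvent::key_press("KeyP")]), // Pause
    ];

    for (name, input_fn) in scenarios {
        let config = SimulationConfig::new(42, 1000);
        let result = run_simulation(config, input_fn);
        println!(
            "Scenario '{}': score = {} - {}",
            name, result.final_state.score_left, result.final_state.score_right
        );
    }
}
```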

Performance Benchmarking

```rust
use std::time::Instant;

use jugar_probar::{run_simulation, SimulationConfig};

#[test]
fn benchmark_simulation() {
    let config = SimulationConfig::new(42, 10000);

    let start = Instant::now();
    let _result = run_simulation(config, |_| vec![]);
    let elapsed = start.elapsed();

    println!("10000 frames in {:?}", elapsed);
    println!("FPS: {}", 10000.0 / elapsed.as_secs_f64());

    // Should run faster than real time (10000 frames at 60 FPS is about 166.7 s)
    assert!(elapsed.as_secs_f64() < 10000.0 / 60.0);
}
```

Trueno Simulation Primitives

Probar's simulation testing is powered by trueno's simulation module (v0.8.5+), which provides Toyota Production System-based testing primitives.

SimRng: Deterministic RNG

SimRng provides PCG-based deterministic random number generation:

```rust
use trueno::simulation::SimRng;

// Same seed = same sequence, always
let mut rng = SimRng::new(42);
let value = rng.next_f32();        // Deterministic [0.0, 1.0)
let range = rng.range(1.0, 10.0);  // Deterministic range
let normal = rng.normal(0.0, 1.0); // Deterministic Gaussian

// Fork for parallel testing (child has deterministic offset)
let child_rng = rng.fork();
```
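To show what "PCG-based" means in practice, here is a std-only sketch of PCG32 (PCG-XSH-RR: 64-bit LCG state, 32-bit output with a data-dependent rotation), following the seeding and step of the reference `pcg32_random_r`. It is not trueno's `SimRng` source, and the `fork` scheme is a hypothetical illustration. Every operation is wrapping integer arithmetic, which is why the output sequence is identical on every platform.

```rust
const MULTIPLIER: u64 = 6364136223846793005;

/// PCG32 (PCG-XSH-RR). `inc` selects the stream and must be odd.
#[derive(Clone, Debug)]
pub struct Pcg32 {
    state: u64,
    inc: u64,
}

impl Pcg32 {
    /// Seeding as in the reference implementation: step, add seed, step.
    pub fn new(seed: u64, stream: u64) -> Self {
        let mut rng = Pcg32 { state: 0, inc: (stream << 1) | 1 };
        rng.next_u32();
        rng.state = rng.state.wrapping_add(seed);
        rng.next_u32();
        rng
    }

    pub fn next_u32(&mut self) -> u32 {
        let old = self.state;
        // Advance the LCG (all arithmetic wraps modulo 2^64)
        self.state = old.wrapping_mul(MULTIPLIER).wrapping_add(self.inc);
        // Output permutation on the old state: xorshift, then rotate
        let xorshifted = (((old >> 18) ^ old) >> 27) as u32;
        let rot = (old >> 59) as u32;
        xorshifted.rotate_right(rot)
    }

    /// Uniform in [0.0, 1.0): top 24 bits, which f32 represents exactly.
    pub fn next_f32(&mut self) -> f32 {
        (self.next_u32() >> 8) as f32 / (1u32 << 24) as f32
    }

    /// Hypothetical fork: derive a child seed from two outputs (this
    /// advances the parent) and put the child on the next stream.
    pub fn fork(&mut self) -> Pcg32 {
        let hi = self.next_u32() as u64;
        let lo = self.next_u32() as u64;
        Pcg32::new((hi << 32) | lo, (self.inc >> 1).wrapping_add(1))
    }
}
```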

JidokaGuard: Quality Gates

Stop-on-defect quality checking inspired by Toyota's Jidoka principle:

```rust
use trueno::simulation::JidokaGuard;

let guard = JidokaGuard::new();

// Automatic NaN/Inf detection
guard.check_finite(&game_state.ball_velocity)?;

// Custom invariants
guard.assert_invariant(
    || score <= MAX_SCORE,
    "Score exceeded maximum",
)?;
```
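Why stop on the first non-finite value: once a NaN enters the state, it spreads through every later computation, and `NaN != NaN` makes equality-based checks fail in confusing ways. A std-only sketch of a stop-on-defect finiteness check follows. `check_finite` here is a hypothetical free function, not `JidokaGuard`'s method.

```rust
/// Fails fast with the index of the first NaN or infinite value.
/// Hypothetical helper illustrating a Jidoka-style guard.
pub fn check_finite(name: &str, values: &[f32]) -> Result<(), String> {
    match values.iter().position(|v| !v.is_finite()) {
        None => Ok(()),
        Some(i) => Err(format!("{}[{}] is not finite: {}", name, i, values[i])),
    }
}
```

Running this on each frame's physics output reports the frame and field where the defect first appeared, rather than a mismatched hash hundreds of frames later.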

BackendTolerance: Cross-Platform Validation

Ensure simulation results are consistent across different compute backends:

```rust
use trueno::simulation::BackendTolerance;

let tolerance = BackendTolerance::relaxed();

// Compare GPU vs CPU simulation results.
// Tolerances apply to numeric outputs (e.g. positions), not to state hashes:
// a hash either matches exactly or it does not.
let tol = tolerance.for_backends(Backend::GPU, Backend::Scalar);
assert!((gpu_result - cpu_result).abs() < tol);
```
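Backends need a tolerance because floating-point addition is not associative. A SIMD or GPU reduction adds the same numbers in a different order than a scalar loop, so the last bits can differ. The std-only sketch below emulates a 4-lane reduction in plain Rust (no SIMD intrinsics) and compares it with a sequential sum using a relative tolerance plus an absolute floor. All names are illustrative.

```rust
/// Scalar backend: strict left-to-right accumulation.
pub fn sum_sequential(xs: &[f32]) -> f32 {
    xs.iter().fold(0.0, |acc, &x| acc + x)
}

/// Emulated 4-lane SIMD reduction: lane i accumulates xs[i], xs[i + 4], ...
/// and the lanes are combined pairwise at the end.
pub fn sum_four_lanes(xs: &[f32]) -> f32 {
    let mut lanes = [0.0f32; 4];
    for (i, &x) in xs.iter().enumerate() {
        lanes[i % 4] += x;
    }
    (lanes[0] + lanes[1]) + (lanes[2] + lanes[3])
}

/// Relative comparison with an absolute floor for values near zero.
pub fn close(a: f32, b: f32, rel: f32, abs: f32) -> bool {
    let diff = (a - b).abs();
    diff <= abs || diff <= rel * a.abs().max(b.abs())
}
```

The absolute floor keeps the check meaningful when both results are near zero, where a purely relative tolerance would shrink to nothing.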

BufferRenderer: Visual Regression

Render simulation state to RGBA buffers for visual regression testing:

```rust
use trueno::simulation::{BufferRenderer, ColorPalette};

let renderer = BufferRenderer::new(800, 600);
let buffer = renderer.render_heatmap(&coverage_data, &ColorPalette::viridis())?;

// Compare with golden baseline
let diff = renderer.compare_buffers(&buffer, &golden_buffer)?;
assert!(diff.max_error < 1e-5, "Visual regression detected");
```

Integration with Jugar

Probar's simulation integrates with the jugar game engine:

```rust
use jugar_probar::{run_simulation, InputEvent, SimulationConfig};

// Jugar uses trueno's SimRng internally for determinism
let config = SimulationConfig::new(42, 1000);
let result = run_simulation(config, |_frame| {
    // Deterministic input generation
    vec![InputEvent::key_press("Space")]
});

// Same seed + same inputs = same final state (guaranteed).
// `expected_hash` is the hash recorded from an earlier run with the same seed and inputs.
assert_eq!(result.state_hash, expected_hash);
```

Deterministic Replay

Probar enables frame-perfect replay of game sessions using trueno's SimRng (PCG-based deterministic RNG) to guarantee reproducibility across platforms and runs.

Replay sessions build comprehensive coverage maps over time

Why Deterministic Replay?

- Bug Reproduction: Replay the exact input sequence that caused a bug

- Regression Testing: Verify behavior matches after changes

- Test Generation: Record gameplay, convert to tests

- Demo Playback: Record and replay gameplay sequences

Recording a Session

```rust
use jugar_probar::{Recorder, Recording};

let mut recorder = Recorder::new(seed);
let mut platform = WebPlatform::new_for_test(config);

// Play the game and record each frame's inputs
for frame in 0..1000 {
    let inputs = get_user_inputs();
    recorder.record_frame(frame, &inputs);
    platform.process_inputs(&inputs);
    platform.advance_frame(1.0 / 60.0);
}

// Get the recording
let recording = recorder.finish();

// Save to file
recording.save("gameplay.replay")?;
```

Replaying a Session

```rust
use jugar_probar::{Recording, Replayer};

// Load the recording
let recording = Recording::load("gameplay.replay")?;
let mut replayer = Replayer::new(&recording);
let mut platform = WebPlatform::new_for_test(config);

// Replay exactly, frame by frame
while let Some(inputs) = replayer.next_frame() {
    platform.process_inputs(&inputs);
    platform.advance_frame(1.0 / 60.0);
}

// Verify the final state matches
assert_eq!(
    replayer.expected_final_hash(),
    platform.state_hash()
);
```

Recording Format

```rust
pub struct Recording {
    pub version: u32,
    pub seed: u64,
    pub config: GameConfig,
    pub frames: Vec<FrameData>,
    pub final_state_hash: u64,
}

pub struct FrameData {
    pub frame_number: u32,
    pub inputs: Vec<InputEvent>,
    pub state_hash: Option<u64>, // Optional checkpoints
}
```

State Hashing

```rust
// Hash game state for comparison
let hash = platform.state_hash();

// Or hash specific components
let ball_hash = hash_state(&platform.get_ball_state());
let score_hash = hash_state(&platform.get_score());
```

Verification

```rust
use jugar_probar::{verify_replay, ReplayVerification};

let result = verify_replay(&recording);

match result {
    ReplayVerification::Perfect => {
        println!("Replay is deterministic!");
    }
    ReplayVerification::Diverged { frame, expected, actual } => {
        println!("Diverged at frame {}", frame);
        println!("Expected hash: {}", expected);
        println!("Actual hash: {}", actual);
    }
    ReplayVerification::Failed(error) => {
        println!("Replay failed: {}", error);
    }
}
```

Checkpoints

Add checkpoints for faster debugging:

```rust
let mut recorder = Recorder::new(seed)
    .with_checkpoint_interval(60); // Every 60 frames

// Or add checkpoints manually
recorder.add_checkpoint(platform.snapshot());
```

Binary Replay Format

```rust
// Compact binary format for storage
let bytes = recording.to_bytes();
let recording = Recording::from_bytes(&bytes)?;

// Compressed
let compressed = recording.to_compressed_bytes();
let recording = Recording::from_compressed_bytes(&compressed)?;
```

Replay Speed Control

```rust
let mut replayer = Replayer::new(&recording);

// Normal speed
replayer.set_speed(1.0);

// Fast forward
replayer.set_speed(4.0);

// Slow motion
replayer.set_speed(0.25);

// Step by step
replayer.step(); // Advance one frame
```

Example: Test from Replay

```rust
#[test]
fn test_from_recorded_gameplay() {
    let recording = Recording::load("tests/fixtures/win_game.replay").unwrap();
    let mut replayer = Replayer::new(&recording);
    let mut platform = WebPlatform::new_for_test(recording.config.clone());

    // Replay all frames
    while let Some(inputs) = replayer.next_frame() {
        platform.process_inputs(&inputs);
        platform.advance_frame(1.0 / 60.0);
    }

    // Verify the end state
    let state = platform.get_game_state();
    assert_eq!(state.winner, Some(Player::Left));
    assert_eq!(state.score_left, 10);
}
```

CI Integration

# Verify all replay files are still deterministic

cargo test replay_verification -- --include-ignored

# Or via make

make verify-replays

Debugging with Replays

```rust
// Find the first frame where the bug occurs.
// Binary search assumes the condition stays true once it first becomes true.
let bug_frame = binary_search_replay(&recording, |state| {
    state.ball.y < 0.0 // Bug condition
});
println!("Bug first occurs at frame {}", bug_frame);

// Get the inputs leading up to the bug
let inputs = recording.frames[..bug_frame].to_vec();
println!("Inputs: {:?}", inputs);
```
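Binary search is only valid when the bug condition is monotonic: false for every frame before the first failure and true from then on. Under that assumption, the first failing frame can be found with O(log n) replays instead of n. A std-only sketch follows. `first_failing_frame` is a hypothetical helper, and `fails(frame)` stands in for "replay up to `frame` and test the condition".

```rust
/// Returns the first frame in 0..num_frames for which `fails` is true,
/// assuming `fails` is false up to some frame and true from there on.
/// Returns None if no frame fails.
pub fn first_failing_frame<F: Fn(usize) -> bool>(num_frames: usize, fails: F) -> Option<usize> {
    // Invariant: the answer lies in [lo, hi]; hi == num_frames means "never fails".
    let (mut lo, mut hi) = (0usize, num_frames);
    while lo < hi {
        let mid = lo + (hi - lo) / 2;
        if fails(mid) {
            hi = mid; // mid fails, so the first failure is at or before mid
        } else {
            lo = mid + 1; // mid is fine, so the first failure is after mid
        }
    }
    if lo < num_frames { Some(lo) } else { None }
}
```

For 1000 frames this needs about 10 replays. If the condition can flicker on and off, the search may land on any failing frame rather than the first one, so a linear scan is the safe fallback.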

Determinism Guarantees via Trueno

Probar's deterministic replay is powered by trueno's simulation testing framework (v0.8.5+):

SimRng: PCG-Based Determinism

All randomness in simulations uses trueno::simulation::SimRng:

```rust
use trueno::simulation::SimRng;

// PCG algorithm guarantees identical sequences across:
// - Different operating systems (Linux, macOS, Windows)
// - Different CPU architectures (x86_64, ARM64, WASM)
// - Different compiler versions
let mut rng = SimRng::new(recording.seed);

// Every call produces identical results given the same seed
let ball_angle = rng.range(0.0, std::f32::consts::TAU);
let spawn_delay = rng.range(30, 120); // frames
```

Cross-Backend Consistency

Trueno ensures consistent results even when switching compute backends:

```rust
use trueno::simulation::BackendTolerance;

// Simulation results agree within a strict tolerance whether running on:
// - CPU Scalar backend
// - SIMD (SSE2/AVX2/AVX-512/NEON)
// - GPU (via wgpu)
let tolerance = BackendTolerance::strict();
assert!(verify_cross_backend_determinism(&recording, tolerance));
```

JidokaGuard: Replay Validation

Automatic quality checks during replay:

```rust
use trueno::simulation::JidokaGuard;

let guard = JidokaGuard::new();

// Automatically detects non-determinism sources
guard.check_finite(&state)?; // NaN/Inf corrupts determinism
guard.assert_invariant(
    || state.frame == expected_frame,
    "Frame count mismatch - possible non-determinism",
)?;
```

Why SimRng over the rand crate?

| Feature | SimRng (trueno) | rand crate |
|---|---|---|
| Cross-platform identical | Yes | No (implementation-defined) |
| WASM compatible | Yes | Requires getrandom |
| Fork for parallelism | Yes (deterministic) | No |
| Serializable state | Yes | No |
| Performance | ~2ns/call | ~3ns/call |

Media Recording

Toyota Way: Mieruka (Visibility) - Visual test recordings for review

Probar provides comprehensive media recording capabilities for visual test verification and debugging.

Overview

- GIF Recording - Animated recordings of test execution

- PNG Screenshots - High-quality static screenshots with annotations

- SVG Export - Resolution-independent vector graphics

- MP4 Video - Full motion video with audio (if applicable)


GIF Recording

Toyota Way: Mieruka (Visibility) - Animated test recordings

Record animated GIF recordings of test execution for visual review and debugging.

Basic Usage

```rust
use probar::media::{GifConfig, GifRecorder, GifFrame};

let config = GifConfig::new(320, 240);
let mut recorder = GifRecorder::new(config);

// Add frames during test execution
for screenshot in screenshots {
    let frame = GifFrame::new(screenshot.pixels, 100); // 100ms delay
    recorder.add_frame(frame);
}

let gif_data = recorder.encode()?;
```
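When choosing frame delays, keep in mind that the GIF89a Graphic Control Extension stores the delay as an unsigned 16-bit count of hundredths of a second. A 100 ms delay is written as 10, and every delay is quantized to 10 ms steps. Whether `GifFrame` takes milliseconds and converts them (as the comment above suggests) is an assumption. The std-only helper below shows only the conversion itself; its name is hypothetical.

```rust
/// Converts a delay in milliseconds to the GIF89a delay field
/// (hundredths of a second, stored as u16), rounding to the nearest unit
/// and saturating at the field's maximum.
pub fn ms_to_gif_delay(ms: u32) -> u16 {
    let centis = ms.saturating_add(5) / 10; // round half up
    centis.min(u16::MAX as u32) as u16
}
```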

PNG Screenshots

Toyota Way: Genchi Genbutsu (Go and See) - Visual evidence of test state

Capture high-quality PNG screenshots with metadata and annotations.

Basic Usage

```rust
use probar::media::{PngExporter, PngMetadata, Annotation, CompressionLevel};

let exporter = PngExporter::new()
    .with_compression(CompressionLevel::Best)
    .with_metadata(
        PngMetadata::new()
            .with_title("Test Screenshot")
            .with_test_name("login_test"),
    );

let png_data = exporter.export(&screenshot)?;
```

Annotations

```rust
let annotations = vec![
    Annotation::rectangle(50, 50, 100, 80)
        .with_color(255, 0, 0, 255)
        .with_label("Error area"),
    Annotation::circle(400, 200, 60)
        .with_color(0, 255, 0, 255),
];

let annotated = exporter.export_with_annotations(&screenshot, &annotations)?;
```

SVG Export

Toyota Way: Poka-Yoke (Mistake-Proofing) - Scalable vector output

Generate resolution-independent SVG screenshots for documentation and scaling.

Basic Usage

```rust
use probar::media::{SvgConfig, SvgExporter, SvgShape};

let config = SvgConfig::new(800, 600);
let mut exporter = SvgExporter::new(config);

exporter.add_shape(
    SvgShape::rect(50.0, 50.0, 200.0, 100.0)
        .with_fill("#3498db")
        .with_stroke("#2980b9"),
);

let svg_content = exporter.export()?;
```

MP4 Video

Toyota Way: Genchi Genbutsu (Go and See) - Full motion capture of tests

Record full motion MP4 video of test execution with configurable quality settings.

Basic Usage

```rust
use probar::media::{VideoConfig, VideoRecorder, VideoCodec};

let config = VideoConfig::new(640, 480)
    .with_fps(30)
    .with_bitrate(2_000_000)
    .with_codec(VideoCodec::H264);

let mut recorder = VideoRecorder::new(config);
recorder.start()?;

// Capture frames during the test.
// `pixels`, `width`, `height` and `timestamp_ms` are illustrative field
// names for your own captured-frame type.
for frame in frames {
    recorder.capture_raw_frame(&frame.pixels, frame.width, frame.height, frame.timestamp_ms)?;
}

let video_data = recorder.stop()?;
```
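Two small calculations help when driving `capture_raw_frame`. First, derive each timestamp from the frame index instead of adding a rounded 33 ms per frame, which drifts (30 × 33 ms = 990 ms instead of 1000 ms). Second, the configured bitrate gives a rough file size: at 2,000,000 bit/s, 10 seconds is about 2.5 MB. A std-only sketch of both follows. The helper names are hypothetical, and real encoders only approximate the target bitrate.

```rust
/// Presentation timestamp in ms for frame `index` at `fps`, computed from
/// the index so rounding error does not accumulate across frames.
pub fn frame_timestamp_ms(index: u64, fps: u64) -> u64 {
    index * 1000 / fps
}

/// Rough size of a constant-bitrate stream: bits/s * seconds / 8.
pub fn estimated_size_bytes(bitrate_bps: u64, duration_ms: u64) -> u64 {
    bitrate_bps * duration_ms / 8 / 1000
}
```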

Network Interception

Toyota Way: Poka-Yoke (Mistake-Proofing) - Type-safe request handling

Intercept and mock HTTP requests for isolated testing.

Running the Example

cargo run --example network_intercept

Basic Usage

```rust
use probar::prelude::*;

// Create a network interceptor
let mut interceptor = NetworkInterceptionBuilder::new()
    .capture_all()     // Capture all requests
    .block_unmatched() // Block unmatched requests
    .build();

// Add mock routes
interceptor.get("/api/users", MockResponse::json(&serde_json::json!({
    "users": [{"id": 1, "name": "Alice"}]
}))?);
interceptor.post("/api/users", MockResponse::new().with_status(201));

// Start interception
interceptor.start();
```

URL Patterns

```rust
use probar::network::UrlPattern;

// Exact match
let exact = UrlPattern::Exact("https://api.example.com/users".into());

// Prefix match
let prefix = UrlPattern::Prefix("https://api.example.com/".into());

// Contains substring
let contains = UrlPattern::Contains("/api/".into());

// Glob pattern
let glob = UrlPattern::Glob("https://api.example.com/*".into());

// Regex pattern
let regex = UrlPattern::Regex(r"https://.*\.example\.com/.*".into());

// Match any
let any = UrlPattern::Any;
```
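The non-regex variants are straightforward string tests, and a glob with `*` wildcards can be matched without a regex engine. The std-only sketch below uses a local `Pattern` enum that mirrors a subset of `UrlPattern`. It assumes `*` is the only wildcard (matching any run of characters, including none), and it omits the regex variant because that would require the `regex` crate. Probar's own glob semantics may differ.

```rust
/// Simplified URL pattern (hypothetical subset of the variants above).
pub enum Pattern {
    Exact(String),
    Prefix(String),
    Contains(String),
    Glob(String),
    Any,
}

impl Pattern {
    pub fn matches(&self, url: &str) -> bool {
        match self {
            Pattern::Exact(p) => url == p.as_str(),
            Pattern::Prefix(p) => url.starts_with(p.as_str()),
            Pattern::Contains(p) => url.contains(p.as_str()),
            Pattern::Glob(p) => glob_match(p, url),
            Pattern::Any => true,
        }
    }
}

/// `*` matches any run of characters; everything else is literal.
/// Greedy matching that backtracks to the most recent `*` on a mismatch.
fn glob_match(pattern: &str, text: &str) -> bool {
    let p: Vec<char> = pattern.chars().collect();
    let t: Vec<char> = text.chars().collect();
    let (mut pi, mut ti) = (0, 0);
    let mut star: Option<usize> = None; // index of the last `*` seen in p
    let mut mark = 0; // position in t where that `*` started matching
    while ti < t.len() {
        if pi < p.len() && p[pi] == '*' {
            star = Some(pi);
            mark = ti;
            pi += 1;
        } else if pi < p.len() && p[pi] == t[ti] {
            pi += 1;
            ti += 1;
        } else if let Some(s) = star {
            // Let the last `*` swallow one more character and retry
            pi = s + 1;
            mark += 1;
            ti = mark;
        } else {
            return false;
        }
    }
    // Any trailing `*`s can match the empty string
    while pi < p.len() && p[pi] == '*' {
        pi += 1;
    }
    pi == p.len()
}
```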

Mock Responses

```rust
use probar::network::MockResponse;

// Simple text response
let text = MockResponse::text("Hello, World!");

// JSON response
let json = MockResponse::json(&serde_json::json!({
    "status": "success",
    "data": {"id": 123}
}))?;

// Error response
let error = MockResponse::error(404, "Not Found");

// Custom response with builder
let custom = MockResponse::new()
    .with_status(200)
    .with_header("Content-Type", "application/json")
    .with_header("X-Custom", "value")
    .with_body(br#"{"key": "value"}"#.to_vec())
    .with_delay(100); // 100ms delay
```

Request Abort (PMAT-006)

Block requests with specific error reasons (Playwright parity):

```rust
use probar::network::{NetworkInterception, AbortReason, UrlPattern};

let mut interceptor = NetworkInterception::new();

// Block tracking and ads
interceptor.abort("/analytics", AbortReason::BlockedByClient);
interceptor.abort("/tracking", AbortReason::BlockedByClient);
interceptor.abort("/ads", AbortReason::BlockedByClient);

// Simulate network failures
interceptor.abort_pattern(
    UrlPattern::Contains("unreachable.com".into()),
    AbortReason::ConnectionFailed,
);
interceptor.abort_pattern(
    UrlPattern::Contains("timeout.com".into()),
    AbortReason::TimedOut,
);

interceptor.start();
```

Abort Reasons

| Reason | Error Code | Description |
|---|---|---|
| Failed | net::ERR_FAILED | Generic failure |
| Aborted | net::ERR_ABORTED | Request aborted |
| TimedOut | net::ERR_TIMED_OUT | Request timed out |
| AccessDenied | net::ERR_ACCESS_DENIED | Access denied |
| ConnectionClosed | net::ERR_CONNECTION_CLOSED | Connection closed |
| ConnectionFailed | net::ERR_CONNECTION_FAILED | Connection failed |
| ConnectionRefused | net::ERR_CONNECTION_REFUSED | Connection refused |
| ConnectionReset | net::ERR_CONNECTION_RESET | Connection reset |
| InternetDisconnected | net::ERR_INTERNET_DISCONNECTED | No internet |
| NameNotResolved | net::ERR_NAME_NOT_RESOLVED | DNS failure |
| BlockedByClient | net::ERR_BLOCKED_BY_CLIENT | Blocked by client |
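The table is a one-to-one mapping. In a test helper it can be written as an exhaustive `match`, so adding a variant without a code becomes a compile error rather than a silent gap. The sketch below mirrors the table with a local enum. It is illustrative only; Probar's own `AbortReason` may expose its code differently.

```rust
/// Local mirror of the abort reasons in the table above.
#[derive(Debug, Clone, Copy, PartialEq)]
pub enum AbortReason {
    Failed,
    Aborted,
    TimedOut,
    AccessDenied,
    ConnectionClosed,
    ConnectionFailed,
    ConnectionRefused,
    ConnectionReset,
    InternetDisconnected,
    NameNotResolved,
    BlockedByClient,
}

impl AbortReason {
    /// Network error name reported to the page for this reason.
    pub fn error_code(self) -> &'static str {
        match self {
            AbortReason::Failed => "net::ERR_FAILED",
            AbortReason::Aborted => "net::ERR_ABORTED",
            AbortReason::TimedOut => "net::ERR_TIMED_OUT",
            AbortReason::AccessDenied => "net::ERR_ACCESS_DENIED",
            AbortReason::ConnectionClosed => "net::ERR_CONNECTION_CLOSED",
            AbortReason::ConnectionFailed => "net::ERR_CONNECTION_FAILED",
            AbortReason::ConnectionRefused => "net::ERR_CONNECTION_REFUSED",
            AbortReason::ConnectionReset => "net::ERR_CONNECTION_RESET",
            AbortReason::InternetDisconnected => "net::ERR_INTERNET_DISCONNECTED",
            AbortReason::NameNotResolved => "net::ERR_NAME_NOT_RESOLVED",
            AbortReason::BlockedByClient => "net::ERR_BLOCKED_BY_CLIENT",
        }
    }
}
```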

Wait for Request/Response (PMAT-006)

```rust
use probar::network::{NetworkInterception, UrlPattern};

let mut interceptor = NetworkInterception::new().capture_all();
interceptor.start();

// ... trigger some network activity ...

// Find a captured request
let pattern = UrlPattern::Contains("api/users".into());
if let Some(request) = interceptor.find_request(&pattern) {
    println!("Found request: {}", request.url);
    println!("Method: {:?}", request.method);
}

// Find the response for a pattern
if let Some(response) = interceptor.find_response_for(&pattern) {
    println!("Status: {}", response.status);
    println!("Body: {}", response.body_string());
}

// Get all captured responses
let responses = interceptor.captured_responses();
println!("Total responses: {}", responses.len());
```

Assertions

```rust
use probar::network::{NetworkInterception, UrlPattern};

let mut interceptor = NetworkInterception::new().capture_all();
interceptor.start();

// ... trigger network activity ...

// Assert a request was made
interceptor.assert_requested(&UrlPattern::Contains("/api/users".into()))?;

// Assert the request count
interceptor.assert_requested_times(&UrlPattern::Contains("/api/".into()), 3)?;

// Assert a request was NOT made
interceptor.assert_not_requested(&UrlPattern::Contains("/admin".into()))?;
```

Route Management

```rust
use probar::network::{NetworkInterception, Route, UrlPattern, HttpMethod, MockResponse};

let mut interceptor = NetworkInterception::new();

// Add a route directly
let route = Route::new(
    UrlPattern::Contains("/api/users".into()),
    HttpMethod::Get,
    MockResponse::text("users data"),
).times(2); // Only match twice
interceptor.route(route);

// Check route count
println!("Routes: {}", interceptor.route_count());

// Clear all routes
interceptor.clear_routes();

// Clear captured requests
interceptor.clear_captured();
```
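`.times(2)` implies a per-route use counter. The std-only sketch below shows one plausible dispatch rule. It assumes routes are tried in insertion order, that a route-side `Any` method matches every request, and that an exhausted route falls through to later routes (or to the unmatched behaviour). These types are hypothetical, not Probar's.

```rust
#[derive(Debug, Clone, Copy, PartialEq)]
pub enum Method {
    Get,
    Post,
    Any,
}

impl Method {
    /// `Any` on the route side matches every request method.
    pub fn matches(self, request: Method) -> bool {
        self == Method::Any || self == request
    }
}

/// A mock route that answers at most `remaining` times (None = unlimited).
pub struct Route {
    pub url_contains: &'static str,
    pub method: Method,
    pub status: u16,
    pub remaining: Option<u32>,
}

/// Returns the status of the first matching route with uses left and
/// decrements its counter; None means the request falls through.
pub fn handle(routes: &mut [Route], method: Method, url: &str) -> Option<u16> {
    for route in routes.iter_mut() {
        if !route.method.matches(method) || !url.contains(route.url_contains) {
            continue;
        }
        match route.remaining {
            Some(0) => continue, // exhausted: try later routes
            Some(n) => route.remaining = Some(n - 1),
            None => {}
        }
        return Some(route.status);
    }
    None
}
```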

HTTP Methods

```rust
use probar::network::HttpMethod;

// Available methods
let get = HttpMethod::Get;
let post = HttpMethod::Post;
let put = HttpMethod::Put;
let delete = HttpMethod::Delete;
let patch = HttpMethod::Patch;
let head = HttpMethod::Head;
let options = HttpMethod::Options;
let any = HttpMethod::Any; // Matches any method

// Parse from string
let method = HttpMethod::from_str("POST");

// Convert to string
let s = method.as_str(); // "POST"

// Check if methods match
assert!(HttpMethod::Any.matches(&HttpMethod::Get));
```

Example: Testing API Calls

```rust
use probar::prelude::*;

fn test_user_api() -> ProbarResult<()> {
    let mut interceptor = NetworkInterceptionBuilder::new()
        .capture_all()
        .build();

    // Mock API responses
    interceptor.get("/api/users", MockResponse::json(&serde_json::json!({
        "users": [
            {"id": 1, "name": "Alice"},
            {"id": 2, "name": "Bob"}
        ]
    }))?);

    interceptor.post("/api/users", MockResponse::new()
        .with_status(201)
        .with_json(&serde_json::json!({"id": 3, "name": "Charlie"}))?);

    interceptor.delete("/api/users/1", MockResponse::new().with_status(204));

    // Block external tracking
    interceptor.abort("/analytics", AbortReason::BlockedByClient);

    interceptor.start();

    // ... run your tests ...

    // Verify API calls
    interceptor.assert_requested(&UrlPattern::Contains("/api/users".into()))?;

    Ok(())
}
```

WebSocket Testing

Toyota Way: Genchi Genbutsu (Go and See) - Monitor real-time connections

Monitor and test WebSocket connections with message capture, mocking, and state tracking.

Running the Example

cargo run --example websocket_monitor

Quick Start

```rust
use probar::websocket::{WebSocketMonitor, WebSocketMessage};

// Create a WebSocket monitor
let monitor = WebSocketMonitor::new();

// Monitor messages
monitor.on_message(|msg| {
    println!("Message: {} - {:?}", msg.direction, msg.data);
});

// Start monitoring
monitor.start("ws://localhost:8080/game")?;
```

WebSocket Monitor

```rust
use probar::websocket::{WebSocketMonitor, WebSocketMonitorBuilder};

// Build a monitor with options
let monitor = WebSocketMonitorBuilder::new()
    .capture_binary(true)
    .capture_text(true)
    .max_messages(1000)
    .on_open(|| println!("Connected"))
    .on_close(|| println!("Disconnected"))
    .on_error(|e| eprintln!("Error: {}", e))
    .build();

// Get captured messages
let messages = monitor.messages();
println!("Captured {} messages", messages.len());
```

WebSocket Messages

```rust
use probar::websocket::{WebSocketMessage, MessageDirection, MessageType};

// Message structure
let message = WebSocketMessage {
    direction: MessageDirection::Incoming,
    message_type: MessageType::Text,
    data: r#"{"action": "move", "x": 100, "y": 200}"#.to_string(),
    timestamp_ms: 1234567890,
};

// Check direction
match message.direction {
    MessageDirection::Incoming => println!("Server → Client"),
    MessageDirection::Outgoing => println!("Client → Server"),
}

// Check type
match message.message_type {
    MessageType::Text => println!("Text message: {}", message.data),
    MessageType::Binary => println!("Binary message ({} bytes)", message.data.len()),
}
```

Message Direction

```rust
use probar::websocket::MessageDirection;

// Message directions
let directions = [
    MessageDirection::Incoming, // Server to client
    MessageDirection::Outgoing, // Client to server
];

// Filter by direction
fn filter_incoming(
    messages: &[probar::websocket::WebSocketMessage],
) -> Vec<&probar::websocket::WebSocketMessage> {
    messages
        .iter()
        .filter(|m| m.direction == MessageDirection::Incoming)
        .collect()
}
```

WebSocket State

```rust
use probar::websocket::WebSocketState;

fn main() {
    // Connection states
    let states = [
        WebSocketState::Connecting, // Connection in progress
        WebSocketState::Connected,  // Connected and ready
        WebSocketState::Closing,    // Close in progress
        WebSocketState::Closed,     // Connection closed
    ];
}

// Monitor state changes
fn describe_state(state: WebSocketState) {
    match state {
        WebSocketState::Connecting => println!("Connecting..."),
        WebSocketState::Connected => println!("Ready to send/receive"),
        WebSocketState::Closing => println!("Closing connection"),
        WebSocketState::Closed => println!("Connection closed"),
    }
}
```
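To verify state changes in a test, you can check a recorded history against the transitions a WebSocket is allowed to make. The sketch below uses a local `WsState` enum that mirrors the four states above; it is not probar's type. The allowed transitions follow the browser `readyState` model (CONNECTING, OPEN, CLOSING, CLOSED), in which a failed handshake or a dropped connection goes straight to closed.

```rust
/// Local mirror of the four states listed above (a stand-in, not probar's enum).
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
enum WsState {
    Connecting,
    Connected,
    Closing,
    Closed,
}

/// Returns true if moving from `from` to `to` is a legal transition.
fn is_valid_transition(from: WsState, to: WsState) -> bool {
    use WsState::*;
    matches!(
        (from, to),
        (Connecting, Connected)    // handshake succeeded
            | (Connecting, Closed) // handshake failed
            | (Connected, Closing) // close handshake started
            | (Connected, Closed)  // connection dropped abruptly
            | (Closing, Closed)    // close handshake finished
    )
}

/// Checks a recorded state history and reports the first illegal step.
fn check_history(history: &[WsState]) -> Result<(), (WsState, WsState)> {
    for pair in history.windows(2) {
        if !is_valid_transition(pair[0], pair[1]) {
            return Err((pair[0], pair[1]));
        }
    }
    Ok(())
}

fn main() {
    use WsState::*;
    let normal = [Connecting, Connected, Closing, Closed];
    println!("normal lifecycle: {:?}", check_history(&normal));
    println!("skipped handshake: {:?}", check_history(&[Connecting, Closing]));
}
```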

WebSocket Mocking

```rust
use probar::websocket::{WebSocketMock, MockWebSocketResponse};

fn main() {
    // Create a mock WebSocket server
    let mock = WebSocketMock::new()
        .on_connect(|| MockWebSocketResponse::send(r#"{"type": "welcome"}"#))
        .on_message("ping", || MockWebSocketResponse::send(r#"{"type": "pong"}"#))
        .on_message_pattern(r"move:(\d+),(\d+)", |captures| {
            // Capture groups 1 and 2 hold the x and y coordinates
            let x = captures.get(1).map(|m| m.as_str()).unwrap_or("0");
            let y = captures.get(2).map(|m| m.as_str()).unwrap_or("0");
            MockWebSocketResponse::send(format!(
                r#"{{"type": "moved", "x": {}, "y": {}}}"#,
                x, y
            ))
        });

    // Use in tests
    // let response = mock.handle_message("ping");
    // assert_eq!(response.data, r#"{"type": "pong"}"#);
}
```
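The dispatch logic behind a mock like this can be sketched with the standard library alone: exact-match handlers are looked up first, then a hand-written parser stands in for the `move:(\d+),(\d+)` regex. `MockServer` and `handle` are hypothetical names for illustration, not probar's API, and the parser only roughly matches the regex (for example, it accepts values that fit in a `u32`).

```rust
use std::collections::HashMap;

/// Hypothetical std-only mock: exact replies plus one `move:x,y` pattern.
struct MockServer {
    exact: HashMap<String, String>,
}

impl MockServer {
    fn new() -> Self {
        let mut exact = HashMap::new();
        exact.insert("ping".to_string(), r#"{"type": "pong"}"#.to_string());
        MockServer { exact }
    }

    /// Returns the mock reply for `msg`, or None if no handler matches.
    fn handle(&self, msg: &str) -> Option<String> {
        // Exact-match handlers take priority
        if let Some(reply) = self.exact.get(msg) {
            return Some(reply.clone());
        }
        // Pattern handler: "move:<x>,<y>" with unsigned integer coordinates
        let coords = msg.strip_prefix("move:")?;
        let (x, y) = coords.split_once(',')?;
        let x: u32 = x.parse().ok()?;
        let y: u32 = y.parse().ok()?;
        Some(format!(r#"{{"type": "moved", "x": {}, "y": {}}}"#, x, y))
    }
}

fn main() {
    let mock = MockServer::new();
    for msg in ["ping", "move:3,4", "hello"] {
        println!("{} -> {:?}", msg, mock.handle(msg));
    }
}
```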

WebSocket Connection

```rust
use probar::websocket::WebSocketConnection;

fn main() {
    // Track connection details
    let connection = WebSocketConnection {
        url: "ws://localhost:8080/game".to_string(),
        protocol: Some("game-protocol-v1".to_string()),
        state: probar::websocket::WebSocketState::Connected,
        messages_sent: 42,
        messages_received: 38,
        bytes_sent: 2048,
        bytes_received: 1536,
    };

    println!("URL: {}", connection.url);
    println!("Protocol: {:?}", connection.protocol);
    println!(
        "Messages: {} sent, {} received",
        connection.messages_sent, connection.messages_received
    );
}
```

Testing Game Protocol

```rust
use probar::websocket::{WebSocketMonitor, MessageDirection};

fn test_game_protocol() {
    let monitor = WebSocketMonitor::new();

    // Connect to game server
    // monitor.start("ws://localhost:8080/game")?;

    // Send player action
    // monitor.send(r#"{"action": "join", "player": "test"}"#)?;

    // Wait for response
    // let response = monitor.wait_for_message(|msg| {
    //     msg.direction == MessageDirection::Incoming
    //         && msg.data.contains("joined")
    // })?;

    // Verify protocol
    // assert!(response.data.contains(r#""status": "ok""#));
}
```

Message Assertions

```rust
use probar::websocket::{WebSocketMonitor, WebSocketMessage};

fn assert_message_received(monitor: &WebSocketMonitor, expected_type: &str) {
    let messages = monitor.messages();
    // Plain substring match: only finds `"type": "<name>"` written with exactly this spacing
    let found = messages.iter().any(|msg| {
        msg.data.contains(&format!(r#""type": "{}""#, expected_type))
    });
    assert!(found, "Expected message type '{}' not found", expected_type);
}

fn assert_message_count(monitor: &WebSocketMonitor, expected: usize) {
    let actual = monitor.messages().len();
    assert_eq!(actual, expected, "Expected {} messages, got {}", expected, actual);
}
```
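Counting messages does not check ordering. A protocol test often also needs every outgoing request to be followed later by its reply. The sketch below performs that check over a captured log. `Dir`, `Msg`, and `every_request_answered` are hypothetical local types and names, not probar's API. A reply that arrives before any request is treated as unsolicited and does not count.

```rust
/// Local stand-ins for a captured message log (not probar's types).
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
enum Dir {
    Outgoing,
    Incoming,
}

struct Msg {
    dir: Dir,
    data: String,
}

/// True if each outgoing `request` is matched by a later incoming `reply`.
fn every_request_answered(log: &[Msg], request: &str, reply: &str) -> bool {
    let mut pending = 0usize;
    for m in log {
        match m.dir {
            Dir::Outgoing if m.data == request => pending += 1,
            // Only count replies that answer an outstanding request
            Dir::Incoming if m.data == reply && pending > 0 => pending -= 1,
            _ => {}
        }
    }
    pending == 0
}

fn main() {
    let log = vec![
        Msg { dir: Dir::Outgoing, data: "ping".to_string() },
        Msg { dir: Dir::Incoming, data: "pong".to_string() },
    ];
    println!("all answered: {}", every_request_answered(&log, "ping", "pong"));
}
```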

Binary Messages

```rust
use base64::Engine as _;
use probar::websocket::{WebSocketMessage, MessageType};

// Handle binary messages (e.g., game state updates)
fn handle_binary(message: &WebSocketMessage) {
    if message.message_type == MessageType::Binary {
        // Binary data is base64 encoded
        // let bytes = base64::engine::general_purpose::STANDARD.decode(&message.data)?;
        // Parse game state from bytes
    }
}

// Send binary data
fn send_binary(monitor: &probar::websocket::WebSocketMonitor, data: &[u8]) {
    // `base64::encode` is deprecated since base64 0.21; use an Engine instead
    let encoded = base64::engine::general_purpose::STANDARD.encode(data);
    // monitor.send_binary(encoded)?;
}
```
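Because binary payloads are said to be carried as base64 text, it can help to see what that encoding does to the bytes. Below is a dependency-free sketch of standard RFC 4648 base64 with `=` padding, for illustration only. In real code the `base64` crate shown above is the better choice. Decoding performs only minimal validation: it does not reject padding in a non-final chunk or check the unused trailing bits.

```rust
const ALPHABET: &[u8; 64] =
    b"ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/";

/// Standard (RFC 4648) base64 encoding with `=` padding.
fn encode(input: &[u8]) -> String {
    let mut out = String::with_capacity((input.len() + 2) / 3 * 4);
    for chunk in input.chunks(3) {
        // Pack up to three bytes into one 24-bit group (missing bytes are zero)
        let b1 = *chunk.get(1).unwrap_or(&0);
        let b2 = *chunk.get(2).unwrap_or(&0);
        let n = (u32::from(chunk[0]) << 16) | (u32::from(b1) << 8) | u32::from(b2);
        // A chunk of k bytes yields k + 1 characters; the rest is padding
        for i in 0..4 {
            if i <= chunk.len() {
                out.push(ALPHABET[((n >> (18 - 6 * i)) & 0x3f) as usize] as char);
            } else {
                out.push('=');
            }
        }
    }
    out
}

/// Decodes padded base64; returns None on malformed input.
fn decode(input: &str) -> Option<Vec<u8>> {
    let bytes = input.as_bytes();
    if bytes.len() % 4 != 0 {
        return None;
    }
    let mut out = Vec::with_capacity(bytes.len() / 4 * 3);
    for chunk in bytes.chunks(4) {
        let mut n = 0u32;
        let mut pad = 0;
        for &c in chunk {
            // Once padding starts, only more padding may follow
            if pad > 0 && c != b'=' {
                return None;
            }
            let v = match c {
                b'A'..=b'Z' => c - b'A',
                b'a'..=b'z' => c - b'a' + 26,
                b'0'..=b'9' => c - b'0' + 52,
                b'+' => 62,
                b'/' => 63,
                b'=' => {
                    pad += 1;
                    0
                }
                _ => return None,
            };
            n = (n << 6) | u32::from(v);
        }
        if pad > 2 {
            return None;
        }
        out.push((n >> 16) as u8);
        if pad < 2 {
            out.push((n >> 8) as u8);
        }
        if pad == 0 {
            out.push(n as u8);
        }
    }
    Some(out)
}

fn main() {
    let state = [0u8, 255, 16, 32, 7];
    let text = encode(&state);
    println!("{} -> {:?}", text, decode(&text));
}
```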

Connection Lifecycle

```rust
use probar::websocket::WebSocketMonitor;

fn test_connection_lifecycle() {
    let monitor = WebSocketMonitor::new();

    // Test connection
    // assert!(monitor.connect("ws://localhost:8080").is_ok());
    // assert!(monitor.is_connected());

    // Test messaging
    // monitor.send("hello")?;
    // let response = monitor.wait_for_message()?;

    // Test disconnection
    // monitor.close()?;
    // assert!(!monitor.is_connected());

    // Verify clean shutdown
    // assert_eq!(monitor.close_code(), Some(1000)); // Normal closure
}
```
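The `1000` in the clean-shutdown check is the RFC 6455 "normal closure" status code. A small lookup makes close-code failures readable in test output. The function names below are hypothetical helpers. The code values come from RFC 6455 §7.4, where 1005, 1006, and 1015 are reserved and never sent in a close frame.

```rust
/// Describes a WebSocket close status code (values from RFC 6455 §7.4).
fn describe_close_code(code: u16) -> &'static str {
    match code {
        1000 => "normal closure",
        1001 => "going away",
        1002 => "protocol error",
        1003 => "unsupported data",
        1005 => "no status received (reserved, not sent on the wire)",
        1006 => "abnormal closure (reserved, not sent on the wire)",
        1007 => "invalid payload data",
        1008 => "policy violation",
        1009 => "message too big",
        1010 => "mandatory extension missing",
        1011 => "internal server error",
        1015 => "TLS handshake failure (reserved, not sent on the wire)",
        3000..=3999 => "registered for libraries and frameworks",
        4000..=4999 => "private use by applications",
        _ => "unknown or reserved",
    }
}

/// A teardown check that accepts only a normal closure.
fn is_clean_shutdown(code: Option<u16>) -> bool {
    code == Some(1000)
}

fn main() {
    for code in [1000, 1006, 4001] {
        println!("{}: {}", code, describe_close_code(code));
    }
}
```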

Multiplayer Game Testing

```rust
use probar::websocket::WebSocketMonitor;

fn test_multiplayer_sync() {
    let player1 = WebSocketMonitor::new();
    let player2 = WebSocketMonitor::new();

    // Both players connect
    // player1.connect("ws://server/game/room1")?;
    // player2.connect("ws://server/game/room1")?;

    // Player 1 moves
    // player1.send(r#"{"action": "move", "x": 100}"#)?;

    // Player 2 should receive update
    // let update = player2.wait_for_message(|m| m.data.contains("player_moved"))?;
    // assert!(update.data.contains(r#""x": 100"#));
}
```
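The property this test checks is that one player's move fans out to everyone else in the room. It can be modelled in-process with `std::sync::mpsc` channels, which is handy for unit-testing relay logic without a server. `Room`, `join`, and `broadcast_move` are hypothetical names, and the `player_moved` event format is an assumption modelled on the commented test above.

```rust
use std::sync::mpsc::{channel, Receiver, Sender};

/// Hypothetical in-memory room: relays each move to every *other* player.
struct Room {
    players: Vec<Sender<String>>,
}

impl Room {
    fn new() -> Self {
        Room { players: Vec::new() }
    }

    /// Joins the room and returns (player id, inbox).
    fn join(&mut self) -> (usize, Receiver<String>) {
        let (tx, rx) = channel();
        self.players.push(tx);
        (self.players.len() - 1, rx)
    }

    /// Broadcasts a `player_moved` event from `sender` to all other players.
    fn broadcast_move(&self, sender: usize, x: i32) {
        let event = format!(
            r#"{{"event": "player_moved", "player": {}, "x": {}}}"#,
            sender, x
        );
        for (id, tx) in self.players.iter().enumerate() {
            if id != sender {
                // A player whose inbox was dropped is simply skipped
                let _ = tx.send(event.clone());
            }
        }
    }
}

fn main() {
    let mut room = Room::new();
    let (p1, _inbox1) = room.join();
    let (_p2, inbox2) = room.join();
    room.broadcast_move(p1, 100);
    println!("player 2 received: {:?}", inbox2.try_recv());
}
```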

Best Practices

- Message Validation: Verify message format before processing

- Connection Handling: Handle reconnection and errors gracefully

- Binary vs Text: Choose appropriate message types for data

- Protocol Testing: Test both client-to-server and server-to-client flows

- State Transitions: Verify connection state changes

- Cleanup: Always close connections in test teardown

Browser Contexts

Toyota Way: Heijunka (Level Loading) - Balanced resource allocation

Manage isolated browser contexts for parallel testing with independent storage, cookies, and sessions.

Running the Example

cargo run --example multi_context

Quick Start

```rust
use probar::{BrowserContext, ContextConfig};

fn main() {
    // Create a context with default settings
    let context = BrowserContext::new(ContextConfig::default());

    // Create a context with custom settings
    let custom = BrowserContext::new(
        ContextConfig::default()
            .with_viewport(1920, 1080)
            .with_locale("en-US")
            .with_timezone("America/New_York"),
    );
}
```

Context Configuration

```rust
use probar::{ContextConfig, StorageState, Cookie};

fn main() {
    // Full configuration
    let config = ContextConfig::default()
        .with_viewport(1280, 720)
        .with_device_scale_factor(2.0)
        .with_mobile(false)
        .with_touch_enabled(false)
        .with_locale("en-GB")
        .with_timezone("Europe/London")
        .with_user_agent("Mozilla/5.0 (Custom Agent)")
        .with_offline(false)
        .with_javascript_enabled(true)
        .with_ignore_https_errors(false);

    println!("Viewport: {}x{}", config.viewport_width, config.viewport_height);
}
```

Storage State

```rust
use probar::{StorageState, Cookie, SameSite};
use std::collections::HashMap;

fn main() {
    // Create storage state
    let mut storage = StorageState::new();

    // Add local storage
    storage.set_local_storage("session", "abc123");
    storage.set_local_storage("theme", "dark");

    // Add session storage
    storage.set_session_storage("cart", "[1,2,3]");

    // Add cookies
    let cookie = Cookie::new("auth_token", "xyz789")
        .with_domain(".example.com")
        .with_path("/")
        .with_secure(true)
        .with_http_only(true)
        .with_same_site(SameSite::Strict);
    storage.add_cookie(cookie);

    // Check storage contents
    println!("Local storage items: {}", storage.local_storage_count());
    println!("Session storage items: {}", storage.session_storage_count());
    println!("Cookies: {}", storage.cookies().len());
}
```

Cookie Management

```rust
use probar::{Cookie, SameSite};

fn main() {
    // Create a basic cookie
    let basic = Cookie::new("user_id", "12345");

    // Create a full cookie
    let secure = Cookie::new("session", "abc123xyz")
        .with_domain(".example.com")
        .with_path("/app")
        .with_expires(1735689600) // Unix timestamp
        .with_secure(true)
        .with_http_only(true)
        .with_same_site(SameSite::Lax);

    // Check cookie properties
    println!("Name: {}", secure.name());
    println!("Value: {}", secure.value());
    println!("Domain: {:?}", secure.domain());
    println!("Secure: {}", secure.secure());
    println!("HttpOnly: {}", secure.http_only());
    println!("SameSite: {:?}", secure.same_site());
}
```
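Whether a browser sends a cookie like `session` depends on its `Domain` and `Path` attributes. The sketch below implements the RFC 6265 matching rules (§5.1.3 domain-match, §5.1.4 path-match) so you can reason about which requests a context's cookies will reach. These helpers are illustrative, not part of probar, and they skip the RFC's special handling of IP-address hosts.

```rust
/// Domain matching per RFC 6265 §5.1.3 (IP-address hosts not handled).
fn domain_matches(host: &str, cookie_domain: &str) -> bool {
    // A leading dot is ignored, so ".example.com" behaves like "example.com"
    let domain = cookie_domain.trim_start_matches('.').to_ascii_lowercase();
    let host = host.to_ascii_lowercase();
    host == domain || host.ends_with(&format!(".{}", domain))
}

/// Path matching per RFC 6265 §5.1.4.
fn path_matches(request_path: &str, cookie_path: &str) -> bool {
    if request_path == cookie_path {
        return true;
    }
    if !request_path.starts_with(cookie_path) {
        return false;
    }
    // "/app" covers "/app/settings" but not "/application"
    cookie_path.ends_with('/') || request_path[cookie_path.len()..].starts_with('/')
}

fn main() {
    let host = "shop.example.com";
    let path = "/app/cart";
    let sent = domain_matches(host, ".example.com") && path_matches(path, "/app");
    println!("cookie sent to {}{}: {}", host, path, sent);
}
```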

Context Pool for Parallel Testing

```rust
use probar::{ContextPool, ContextConfig};

fn main() {
    // Create a pool of contexts
    let pool = ContextPool::new(4); // 4 parallel contexts

    // Acquire a context for testing
    let context = pool.acquire();

    // Run test with context
    // ...
    // Context is returned to pool when dropped

    // Get pool statistics
    let stats = pool.stats();
    println!("Total contexts: {}", stats.total);
    println!("Available: {}", stats.available);
    println!("In use: {}", stats.in_use);
}
```
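"Returned to pool when dropped" is the RAII guard pattern: `acquire` hands out a lease object whose `Drop` impl puts the item back. Below is a minimal generic sketch of that pattern. `Pool`, `Lease`, and `try_acquire` are hypothetical names, and `try_acquire` returns `None` when the pool is exhausted. Whether probar's `acquire` blocks, fails, or grows the pool in that case is not stated here.

```rust
use std::ops::Deref;
use std::sync::Mutex;

/// Hypothetical fixed-size pool; idle items live behind a mutex.
struct Pool<T> {
    idle: Mutex<Vec<T>>,
    total: usize,
}

/// A leased item; dropping it returns the item to the pool.
struct Lease<'a, T> {
    pool: &'a Pool<T>,
    item: Option<T>,
}

impl<T> Pool<T> {
    fn new(items: Vec<T>) -> Self {
        let total = items.len();
        Pool { idle: Mutex::new(items), total }
    }

    /// Non-blocking: returns None when every item is leased out.
    fn try_acquire(&self) -> Option<Lease<'_, T>> {
        let item = self.idle.lock().unwrap().pop()?;
        Some(Lease { pool: self, item: Some(item) })
    }

    fn available(&self) -> usize {
        self.idle.lock().unwrap().len()
    }

    fn in_use(&self) -> usize {
        self.total - self.available()
    }
}

impl<'a, T> Deref for Lease<'a, T> {
    type Target = T;
    fn deref(&self) -> &T {
        self.item.as_ref().expect("lease holds its item until dropped")
    }
}

impl<'a, T> Drop for Lease<'a, T> {
    // Putting the item back here makes the return automatic
    fn drop(&mut self) {
        if let Some(item) = self.item.take() {
            self.pool.idle.lock().unwrap().push(item);
        }
    }
}

fn main() {
    let pool = Pool::new(vec!["ctx-0", "ctx-1"]);
    {
        let a = pool.try_acquire().unwrap();
        let _b = pool.try_acquire().unwrap();
        println!("using {}, in use: {}", *a, pool.in_use());
    } // both leases dropped here
    println!("available after scope: {}", pool.available());
}
```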

Context State Management

```rust
use probar::{BrowserContext, ContextState};

fn main() {
    // Create context
    let context = BrowserContext::default();

    // Check state
    match context.state() {
        ContextState::New => println!("Fresh context"),
        ContextState::Active => println!("Context is running"),
        ContextState::Closed => println!("Context was closed"),
    }

    // Context lifecycle
    // context.start()?;
    // ... run tests ...
    // context.close()?;
}
```

Multi-User Testing

```rust
use probar::{BrowserContext, ContextConfig, StorageState, Cookie};

fn create_user_context(user_id: &str, auth_token: &str) -> BrowserContext {
    let mut storage = StorageState::new();

    // Set user-specific storage
    storage.set_local_storage("user_id", user_id);

    // Set auth cookie
    storage.add_cookie(
        Cookie::new("auth", auth_token)
            .with_domain(".example.com")
            .with_secure(true),
    );

    let config = ContextConfig::default().with_storage_state(storage);
    BrowserContext::new(config)
}

fn main() {
    // Create contexts for different users
    let admin = create_user_context("admin", "admin_token_xyz");
    let user1 = create_user_context("user1", "user1_token_abc");
    let user2 = create_user_context("user2", "user2_token_def");

    // Run parallel tests with different users
    // Each context is completely isolated
}
```

Geolocation in Contexts

```rust
use probar::{ContextConfig, Geolocation};

fn main() {
    // Set geolocation for context
    let config = ContextConfig::default()
        .with_geolocation(Geolocation {
            latitude: 37.7749,
            longitude: -122.4194,
            accuracy: Some(10.0),
        })
        .with_permission("geolocation", "granted");

    // Test location-based features
}
```
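Location-based features usually decide things such as "is the player near this point?", so assertions against an emulated position often need a distance. The sketch below validates coordinate ranges and computes the haversine great-circle distance using a mean Earth radius of 6371 km. `Geo` is a local stand-in that mirrors the `latitude`/`longitude` fields above; it is not probar's `Geolocation` type.

```rust
/// Local stand-in for the coordinates used above (not probar's type).
struct Geo {
    latitude: f64,
    longitude: f64,
}

/// Rejects coordinates outside the valid latitude/longitude ranges.
fn is_valid(g: &Geo) -> bool {
    (-90.0..=90.0).contains(&g.latitude) && (-180.0..=180.0).contains(&g.longitude)
}

/// Great-circle distance in kilometres using the haversine formula.
fn distance_km(a: &Geo, b: &Geo) -> f64 {
    let (phi1, phi2) = (a.latitude.to_radians(), b.latitude.to_radians());
    let dphi = (b.latitude - a.latitude).to_radians();
    let dlambda = (b.longitude - a.longitude).to_radians();
    let h = (dphi / 2.0).sin().powi(2)
        + phi1.cos() * phi2.cos() * (dlambda / 2.0).sin().powi(2);
    // Clamp guards against rounding pushing the asin argument above 1
    2.0 * 6371.0 * h.sqrt().min(1.0).asin()
}

fn main() {
    let sf = Geo { latitude: 37.7749, longitude: -122.4194 };
    let la = Geo { latitude: 34.0522, longitude: -118.2437 };
    println!("SF to LA: {:.0} km", distance_km(&sf, &la));
}
```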

Context Manager

```rust
use probar::{ContextConfig, ContextManager};

fn main() {
    // Create context manager
    let manager = ContextManager::new();

    // Create named contexts
    manager.create("admin", ContextConfig::default());
    manager.create("user", ContextConfig::default());

    // Get context by name
    if let Some(ctx) = manager.get("admin") {
        // Use admin context
    }

    // List all contexts
    for name in manager.context_names() {
        println!("Context: {}", name);
    }

    // Close specific context
    manager.close("admin");

    // Close all contexts
    manager.close_all();
}
```

Saving and Restoring State

```rust
use probar::{BrowserContext, StorageState};

// Save context state after login
fn save_authenticated_state(context: &BrowserContext) -> StorageState {
    context.storage_state()
}

// Restore state in new context
fn restore_state(storage: StorageState) -> BrowserContext {
    let config = probar::ContextConfig::default().with_storage_state(storage);
    BrowserContext::new(config)
}

// Example: Login once, reuse state
// let login_context = BrowserContext::default();
// ... perform login ...
// let state = save_authenticated_state(&login_context);
//
// // Fast test setup - no login needed
// let test_context = restore_state(state);
```
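Save-and-restore stays safe for parallel tests only if each restored context gets its own copy of the snapshot. The sketch below models that with owned, cloned data. `Snapshot` and `Context` are hypothetical stand-ins for `StorageState` and `BrowserContext`, reduced to local storage and cookie name/value pairs. The point is that mutating one restored context must not leak into another.

```rust
use std::collections::HashMap;

/// Hypothetical stand-in for StorageState: an owned, cloneable snapshot.
#[derive(Clone, Debug, Default, PartialEq)]
struct Snapshot {
    local_storage: HashMap<String, String>,
    cookies: Vec<(String, String)>,
}

/// Hypothetical stand-in for a browser context holding its own storage.
#[derive(Default)]
struct Context {
    storage: Snapshot,
}

impl Context {
    /// Returns a copy, so later changes to this context don't alter the snapshot.
    fn storage_state(&self) -> Snapshot {
        self.storage.clone()
    }

    fn from_state(state: Snapshot) -> Self {
        Context { storage: state }
    }
}

fn main() {
    // "Log in" once
    let mut login = Context::default();
    login.storage.cookies.push(("auth".to_string(), "token123".to_string()));
    let saved = login.storage_state();

    // Each test starts from its own copy of the saved state
    let mut test_a = Context::from_state(saved.clone());
    let test_b = Context::from_state(saved.clone());
    test_a.storage.local_storage.insert("theme".to_string(), "dark".to_string());

    println!("test_b local storage empty: {}", test_b.storage.local_storage.is_empty());
}
```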

Best Practices

- Isolation: Use separate contexts for tests that shouldn't share state

- Pool Sizing: Match pool size to available system resources

- State Reuse: Save auth state to avoid repeated logins

- Clean Slate: Use fresh contexts for tests requiring clean state

- Parallel Safe: Each test should use its own context

- Resource Cleanup: Ensure contexts are properly closed

- Timeout Handling: Configure appropriate timeouts per context

Device Emulation